How Traffic Flows in Istio

Note: this post is closely related to Understanding Istio's Networking APIs. If you are unfamiliar with Gateways, VirtualServices, DestinationRules, and ServiceEntries, it may be a good idea to read that post as well.

As the data-plane service proxy, Envoy intercepts all incoming and outgoing requests at runtime as traffic flows through the service mesh. This interception is done transparently via iptables rules or a Berkeley Packet Filter (BPF) program that routes all network traffic, inbound and outbound, through Envoy.

Envoy inspects the request and uses the request’s hostname, SNI, or service virtual IP address to determine the request’s target (the service to which the client is intending to send a request). Envoy then applies that target’s routing rules to determine the request’s destination (the service to which the service proxy is actually going to send the request). Then, having determined the destination, Envoy applies the destination’s rules.

Destination rules include a load-balancing strategy, which is used to pick an endpoint (the address of a worker backing the destination service). Services generally have more than one worker available to process requests, and requests can be balanced across those workers. Finally, Envoy forwards the intercepted request to the endpoint.
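The three steps above (target, then destination, then endpoint) can be sketched in a few lines of Python. This is only an illustration of the decision order, not Envoy's implementation: the `Request` class, the `ROUTING_RULES` and `ENDPOINTS` tables, the service names, and the addresses are all hypothetical, and round-robin stands in for whatever load-balancing strategy the destination's rules specify.

```python
from dataclasses import dataclass
from itertools import cycle
from typing import Callable, Dict, Iterator, List


@dataclass
class Request:
    host: str        # hostname the client addressed (the "target")
    path: str = "/"


# Hypothetical routing rules: target name -> function choosing a destination.
ROUTING_RULES: Dict[str, Callable[[Request], str]] = {
    "reviews": lambda req: "reviews-v2" if req.path.startswith("/beta") else "reviews-v1",
}

# Hypothetical endpoint sets: destination name -> worker addresses.
ENDPOINTS: Dict[str, List[str]] = {
    "reviews-v1": ["10.0.0.11:9080", "10.0.0.12:9080"],
    "reviews-v2": ["10.0.1.21:9080"],
}

# One round-robin iterator per destination (the assumed load-balancing strategy).
_round_robin: Dict[str, Iterator[str]] = {
    name: cycle(addresses) for name, addresses in ENDPOINTS.items()
}


def resolve(req: Request) -> str:
    """Return the endpoint address the proxy would forward this request to."""
    target = req.host                                    # 1. identify the target
    rule = ROUTING_RULES.get(target, lambda _req: target)
    destination = rule(req)                              # 2. routing rules pick the destination
    return next(_round_robin[destination])               # 3. load balancing picks an endpoint
```

Calling `resolve(Request("reviews"))` repeatedly alternates between the two `reviews-v1` workers, while `resolve(Request("reviews", "/beta/new"))` is sent to the single `reviews-v2` worker. A destination with no entry in `ENDPOINTS` raises `KeyError` in this sketch.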

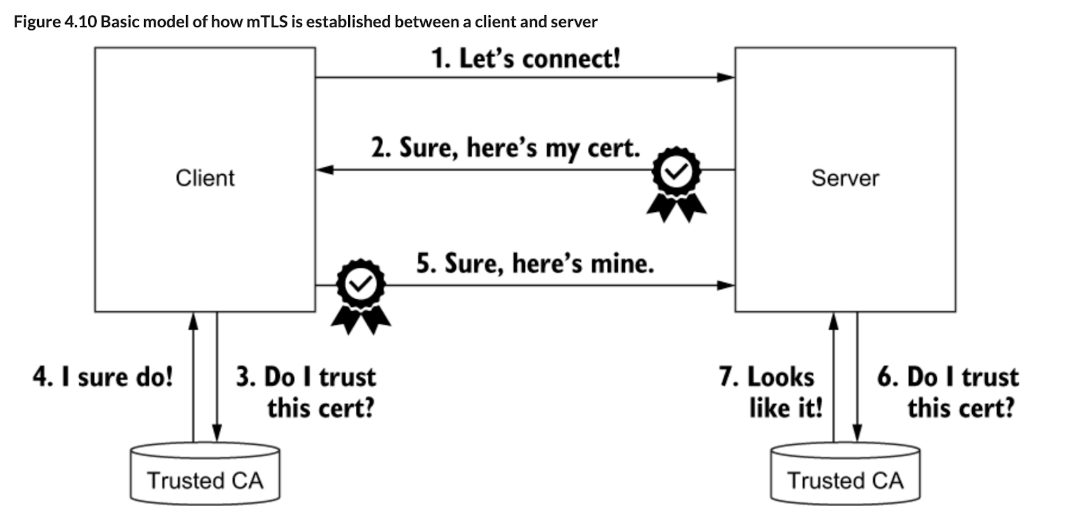

A few points deserve further explanation. First, it is desirable to have your applications speak cleartext (communicate without encryption) to the sidecar service proxy and let the service proxy handle transport security. This does not mean that intra-mesh traffic is unencrypted; quite the opposite. All traffic in the service mesh is encrypted via mTLS, with the sidecar service proxy handling the TLS connection. Once the receiving sidecar intercepts the connection, it terminates TLS and forwards the request to the application over plain HTTP.

For example, your application can speak HTTP to the sidecar and let the sidecar handle the upgrade to HTTPS. This allows the service proxy to gather L7 metadata about requests, which allows Istio to generate L7 metrics and manipulate traffic based on L7 policy. Without the service proxy performing TLS termination, Istio can generate metrics for and apply policy on only the L4 segment of the request, restricting policy to contents of the IP packet and TCP header (essentially, a source and destination address and port number).

Second, we get to perform client-side load balancing rather than relying on traditional load balancing via reverse proxies. Client-side load balancing means that we can establish network connections directly from clients to servers while still maintaining a resilient, well-behaved system. That in turn enables more efficient network topologies with fewer hops than traditional systems that depend on reverse proxies.

Typically, Pilot has detailed endpoint information about services in the registry, which it pushes directly to the service proxies. So, unless you configure the service proxy to do otherwise, at runtime it selects an endpoint from a static set of endpoints pushed to it by Pilot and does not perform dynamic address resolution (e.g., via DNS) at runtime. Therefore, the only things Istio can route traffic to are hostnames in Istio’s service registry. There is an installation option in newer versions of Istio (set to “off” by default in 1.1) that changes this behavior and allows Envoy to forward traffic to unknown services that are not modeled in Istio, so long as the application provides an IP address.
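A minimal sketch of this behavior, under stated assumptions: `EndpointRegistry`, `push`, `pick`, and the `allow_unknown` flag are hypothetical names invented for illustration and are not Pilot's or Envoy's API. The point is that selection happens against a locally held set of endpoints with no DNS lookup at request time, and that an unknown host is only forwarded when the opt-in behavior is enabled and the application supplied an IP address.

```python
import random
from typing import Dict, List, Optional


class EndpointRegistry:
    """Proxy-local view of the endpoints pushed by the control plane."""

    def __init__(self, allow_unknown: bool = False) -> None:
        self._endpoints: Dict[str, List[str]] = {}
        # Hypothetical stand-in for the install option that lets the proxy
        # forward traffic to services that are not modeled in the registry.
        self.allow_unknown = allow_unknown

    def push(self, host: str, endpoints: List[str]) -> None:
        """Called when the control plane pushes a new endpoint set for a host."""
        self._endpoints[host] = list(endpoints)

    def pick(self, host: str, original_dst: Optional[str] = None) -> str:
        """Select an endpoint from the pushed set; no DNS resolution happens here."""
        endpoints = self._endpoints.get(host)
        if endpoints:
            return random.choice(endpoints)
        if self.allow_unknown and original_dst is not None:
            # Unknown service: pass through to the address the application used.
            return original_dst
        raise LookupError(f"{host!r} is not in the service registry")
```

With the default `allow_unknown=False`, `pick("api.external.example")` raises `LookupError` unless the control plane has pushed endpoints for that host. With `allow_unknown=True`, the same call succeeds only if `original_dst` carries the IP address and port the application dialed.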

Hostnames are the core of Istio’s networking model, and Istio’s networking APIs allow you to create hostnames to describe workloads and control how traffic flows to them. Applications address services by name (e.g., by hostname resolved via DNS) to avoid the fragility of addressing services by IP address (an address that might not be known initially, might change at any time, is difficult to remember, and might switch between v4 and v6 depending on its environment).

Consequently, Istio’s network configuration has adopted a name-centric model, in which:

- Gateways expose names.

- VirtualServices configure and route names.

- DestinationRules describe how to communicate with the workloads behind a name.

- ServiceEntries enable the creation of new names.

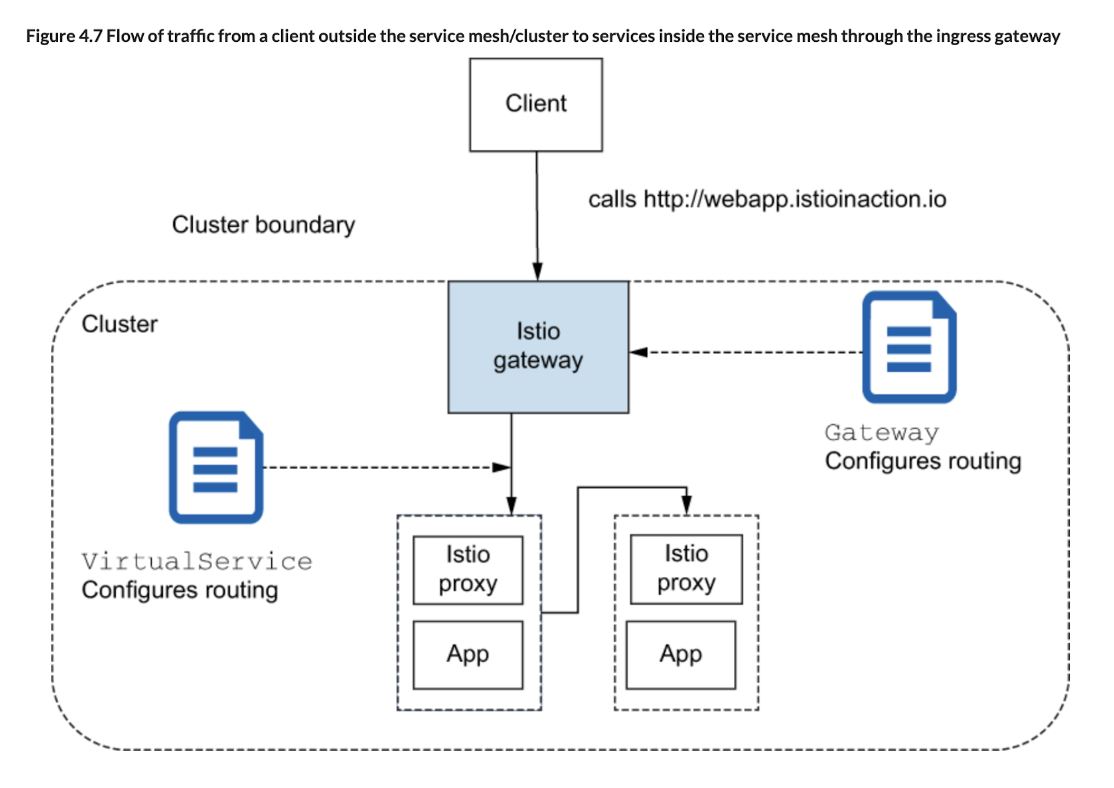

The Gateway resource defines ports, protocols, and virtual hosts that we wish to listen for at the edge of our service-mesh cluster. VirtualService resources define where traffic should go once it’s allowed in at the edge.
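To make the division of labor concrete, here is a sketch of a Gateway and a VirtualService written as Python dictionaries that mirror the shape of the corresponding manifests. The resource names, hostnames, secret name, and backend services are hypothetical, and `is_bound` is a helper invented for this example; it uses exact hostname comparison and ignores wildcards and namespaces.

```python
import json

# Gateway: which port, protocol, and virtual hosts the edge proxy listens for.
gateway = {
    "apiVersion": "networking.istio.io/v1beta1",
    "kind": "Gateway",
    "metadata": {"name": "shop-gateway"},
    "spec": {
        "selector": {"istio": "ingressgateway"},
        "servers": [
            {
                "port": {"number": 443, "name": "https", "protocol": "HTTPS"},
                "hosts": ["shop.example.com"],
                "tls": {"mode": "SIMPLE", "credentialName": "shop-cert"},
            }
        ],
    },
}

# VirtualService: where traffic goes once the Gateway has admitted it.
virtual_service = {
    "apiVersion": "networking.istio.io/v1beta1",
    "kind": "VirtualService",
    "metadata": {"name": "shop-routes"},
    "spec": {
        "hosts": ["shop.example.com"],
        "gateways": ["shop-gateway"],
        "http": [
            {
                "match": [{"uri": {"prefix": "/api"}}],
                "route": [{"destination": {"host": "api.default.svc.cluster.local",
                                           "port": {"number": 8080}}}],
            },
            {
                "route": [{"destination": {"host": "web.default.svc.cluster.local",
                                           "port": {"number": 8080}}}],
            },
        ],
    },
}


def is_bound(vs: dict, gw: dict) -> bool:
    """True if the VirtualService names the Gateway and they share a host."""
    gw_hosts = {host for server in gw["spec"]["servers"] for host in server["hosts"]}
    names_gateway = gw["metadata"]["name"] in vs["spec"].get("gateways", [])
    return names_gateway and bool(gw_hosts & set(vs["spec"]["hosts"]))


if __name__ == "__main__":
    print(json.dumps(gateway, indent=2))
    print(json.dumps(virtual_service, indent=2))
```

The Gateway dictionary carries only listener details (port, protocol, hosts, TLS mode), while every path-based routing decision lives in the VirtualService.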

When running on Kubernetes, you may ask, “Why doesn’t Istio just use the Kubernetes Ingress v1 resource to specify ingress?” Istio does support the Kubernetes Ingress v1 resource, but there are significant limitations.

The first limitation is that Kubernetes Ingress v1 is a very simple specification geared toward HTTP workloads. There are implementations of Kubernetes Ingress (like Nginx and Traefik); however, each is geared toward HTTP traffic. In fact, the Ingress specification only considers port 80 and port 443 as ingress points. This severely limits the types of traffic a cluster operator can allow into the service mesh. For example, if you have Kafka or NATS.io workloads, you may wish to expose direct TCP connections to these messaging systems. Kubernetes Ingress doesn’t allow for that.

Second, the Kubernetes Ingress v1 resource is severely underspecified. There is no common way to specify complex traffic routing rules, traffic splitting, or things like traffic shadowing. The lack of specification in this area causes each vendor to reimagine how best to implement configurations for each type of Ingress implementation (HAProxy, Nginx, and so on).

Finally, since things are underspecified, most vendors have chosen to expose configuration through bespoke annotations on deployments. The annotations between vendors vary and are not portable, and if Istio had continued that trend, there would have been many more annotations to account for all the power of Envoy as an edge gateway.

Ultimately, Istio decided on a clean slate for building ingress patterns and specifically separated out the layer 4 (transport) and layer 5 (session) properties from the layer 7 (application) routing concerns. Istio Gateway handles the L4 and L5 concerns, while VirtualService handles the L7 concerns. Many mesh and gateway providers have also built their own APIs for ingress, and the Kubernetes community is working on a revised Ingress API.

A Gateway can route HTTPS traffic and present a specific certificate depending on the SNI hostname. It can also route TCP traffic based on the SNI hostname without terminating TLS on the Istio ingress gateway. In that case, all the gateway does is inspect the SNI value in the TLS ClientHello and route the traffic to the matching backend, which then terminates the TLS connection. The connection “passes through” the gateway and is handled by the actual service, not the gateway.

In this mode the gateway behaves as an L4 load balancer configured for PASSTHROUGH and routes on SNI. It knows which service the client is trying to access, and which certificate corresponds with that service, thanks to an extension to TLS called Server Name Indication (SNI). When an HTTPS connection is created, the client first identifies which service it is trying to reach in the ClientHello message of the TLS handshake. Istio’s gateway (Envoy, specifically) implements SNI on TLS, which is how it can present the correct certificate and route to the correct service.
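The following sketch shows why SNI-based routing needs no decryption: the server name travels unencrypted in the ClientHello, so a proxy can read it and choose a backend before any TLS termination happens. It uses Python's standard `ssl` module to generate a real ClientHello in memory and then parses it by hand. The `ROUTES` table and its hostnames are hypothetical, the parser assumes the whole ClientHello arrives in a single TLS record, and none of this reflects how Envoy itself is implemented.

```python
import ssl
from typing import Dict, Optional

# Hypothetical passthrough routing table: SNI hostname -> backend address.
ROUTES: Dict[str, str] = {
    "kafka.example.com": "kafka.messaging.svc.cluster.local:9093",
    "nats.example.com": "nats.messaging.svc.cluster.local:4222",
}


def client_hello_bytes(server_name: str) -> bytes:
    """Produce a real TLS ClientHello for server_name without any network I/O."""
    incoming, outgoing = ssl.MemoryBIO(), ssl.MemoryBIO()
    tls = ssl.create_default_context().wrap_bio(
        incoming, outgoing, server_hostname=server_name
    )
    try:
        tls.do_handshake()
    except ssl.SSLWantReadError:
        pass  # expected: the client is now waiting for the server's reply
    return outgoing.read()


def extract_sni(data: bytes) -> Optional[str]:
    """Read the server_name extension from a ClientHello held in one TLS record."""
    try:
        if data[0] != 0x16 or data[5] != 0x01:  # handshake record, ClientHello
            return None
        pos = 5 + 4                              # record header + handshake header
        pos += 2 + 32                            # legacy version + random
        pos += 1 + data[pos]                     # session id
        pos += 2 + int.from_bytes(data[pos:pos + 2], "big")  # cipher suites
        pos += 1 + data[pos]                     # compression methods
        ext_end = pos + 2 + int.from_bytes(data[pos:pos + 2], "big")
        pos += 2
        while pos + 4 <= min(ext_end, len(data)):
            ext_type = int.from_bytes(data[pos:pos + 2], "big")
            ext_len = int.from_bytes(data[pos + 2:pos + 4], "big")
            body = data[pos + 4:pos + 4 + ext_len]
            if ext_type == 0:                    # server_name extension
                # body: list length (2), name type (1), name length (2), name
                name_len = int.from_bytes(body[3:5], "big")
                return body[5:5 + name_len].decode("ascii")
            pos += 4 + ext_len
    except IndexError:
        pass                                     # truncated or malformed input
    return None


def route(first_bytes: bytes) -> Optional[str]:
    """Pick a backend from the SNI alone; the TLS payload is never decrypted."""
    sni = extract_sni(first_bytes)
    return ROUTES.get(sni) if sni is not None else None
```

For example, `route(client_hello_bytes("kafka.example.com"))` selects the Kafka backend from `ROUTES`, after which a passthrough proxy would simply relay the raw bytes and let that backend complete the handshake with its own certificate.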

Written: October 14, 2024