How Traffic Flows in Istio

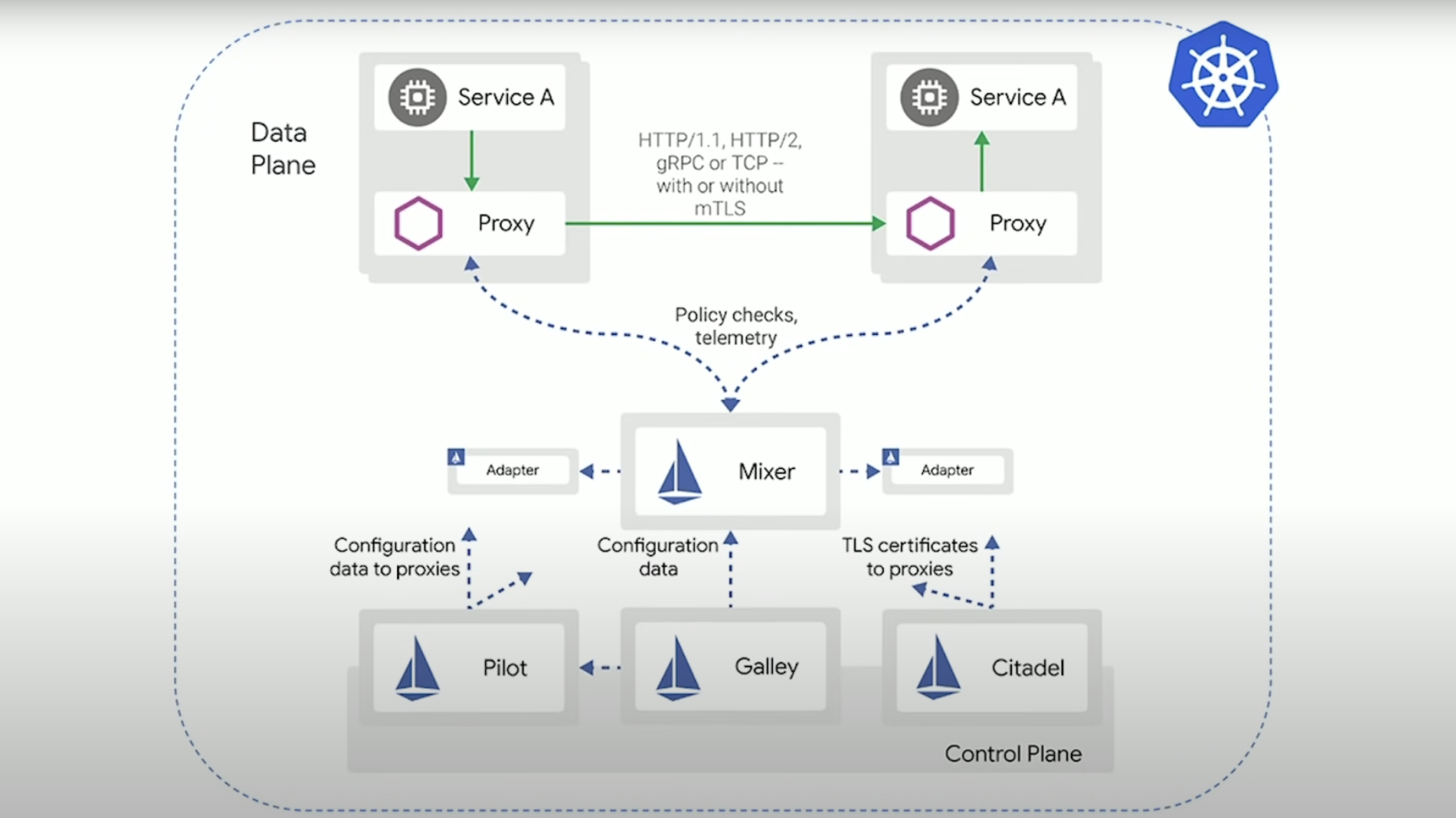

To understand how Istio’s networking APIs work, it’s important to understand how requests actually flow through Istio. Pilot (the Istio Control Plane) understands the topology of the Service Mesh and uses this knowledge, along with additional Istio networking configurations that you provide, to configure the Mesh’s service proxies.

As the data-plane service proxy, Envoy intercepts all incoming and outgoing requests at runtime (as traffic flows through the service mesh). This interception is done transparently via iptables rules or a Berkeley Packet Filter (BPF) program that routes all network traffic, inbound and outbound, through Envoy. Envoy inspects the request and uses the request’s hostname, SNI, or service virtual IP address to determine the request’s target (the service to which the client is intending to send a request). Envoy applies that target’s routing rules to determine the request’s destination (the service to which the service proxy is actually going to send the request). Having determined the destination, Envoy applies the destination’s rules. Destination rules include the load-balancing strategy, which is used to pick an endpoint (the address of a worker supporting the destination service). Services generally have more than one worker available to process requests, and requests can be balanced across those workers. Finally, Envoy forwards the intercepted request to the endpoint.

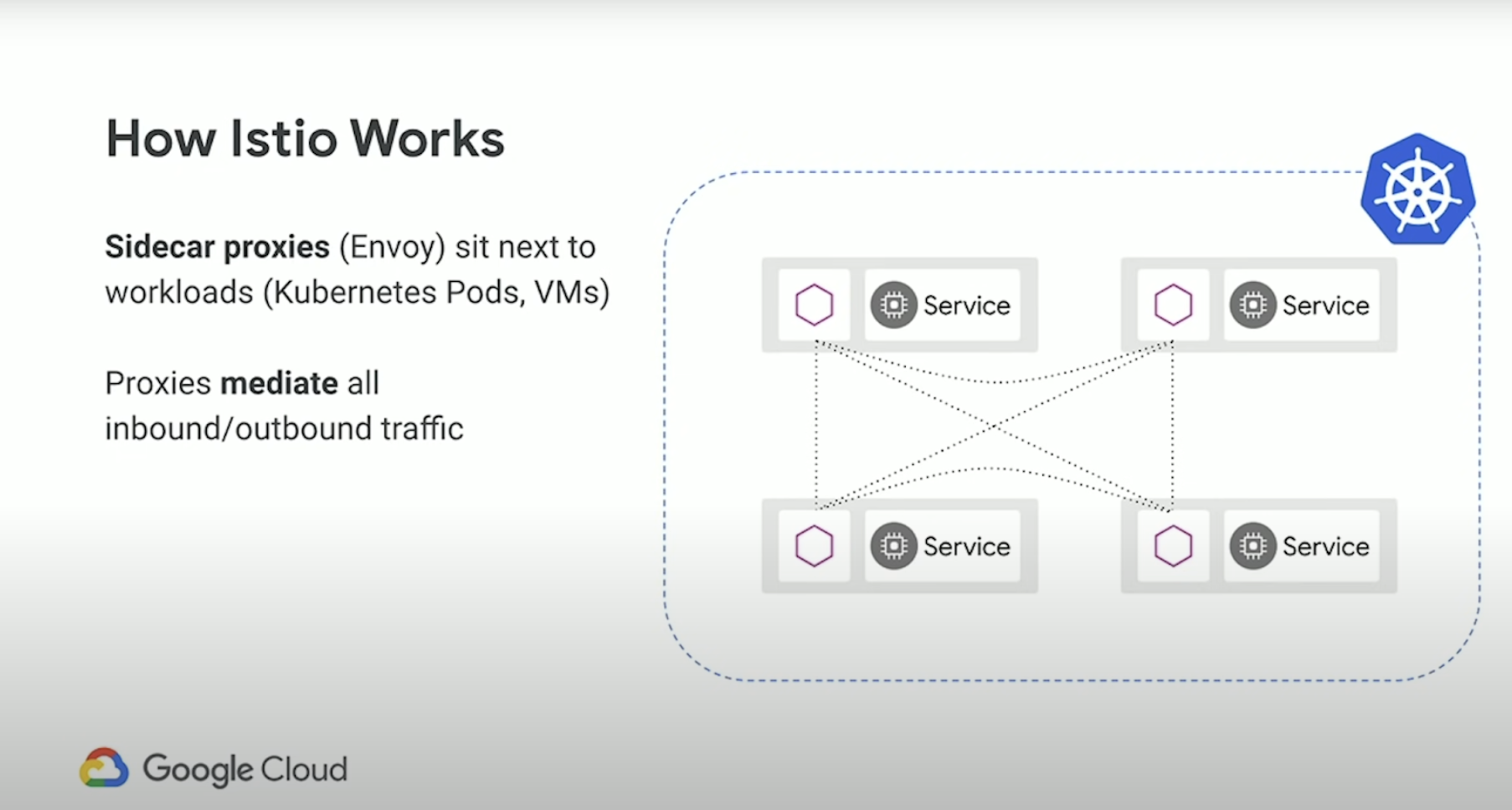

This works through a set of sidecar proxies, which make up the data plane. In the example above we have one Kubernetes cluster with a set of pods (gray boxes), and inside each pod we have two containers. Remember that in Kubernetes a pod can contain one or more containers, and that the containers in a pod share the same Linux network namespace. This means that a container in a pod can talk to the other containers in the same pod over localhost. This is how the magic of Istio works: you use Istio to inject proxies into your workloads, and then all subsequent inbound and outbound traffic goes through those proxies.
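As a minimal sketch of how sidecar injection is commonly switched on, the manifest below labels a namespace for automatic injection. The namespace name `demo` is hypothetical, and the sketch assumes an installation that uses the default `istio-injection` label (revision-based installs use an `istio.io/rev` label instead).

```yaml
# Hypothetical namespace; any pod created here after labeling
# gets an Envoy sidecar container injected next to the app container.
apiVersion: v1
kind: Namespace
metadata:
  name: demo
  labels:
    istio-injection: enabled
```

Pods that were already running before the label was added keep running without a sidecar until they are recreated.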

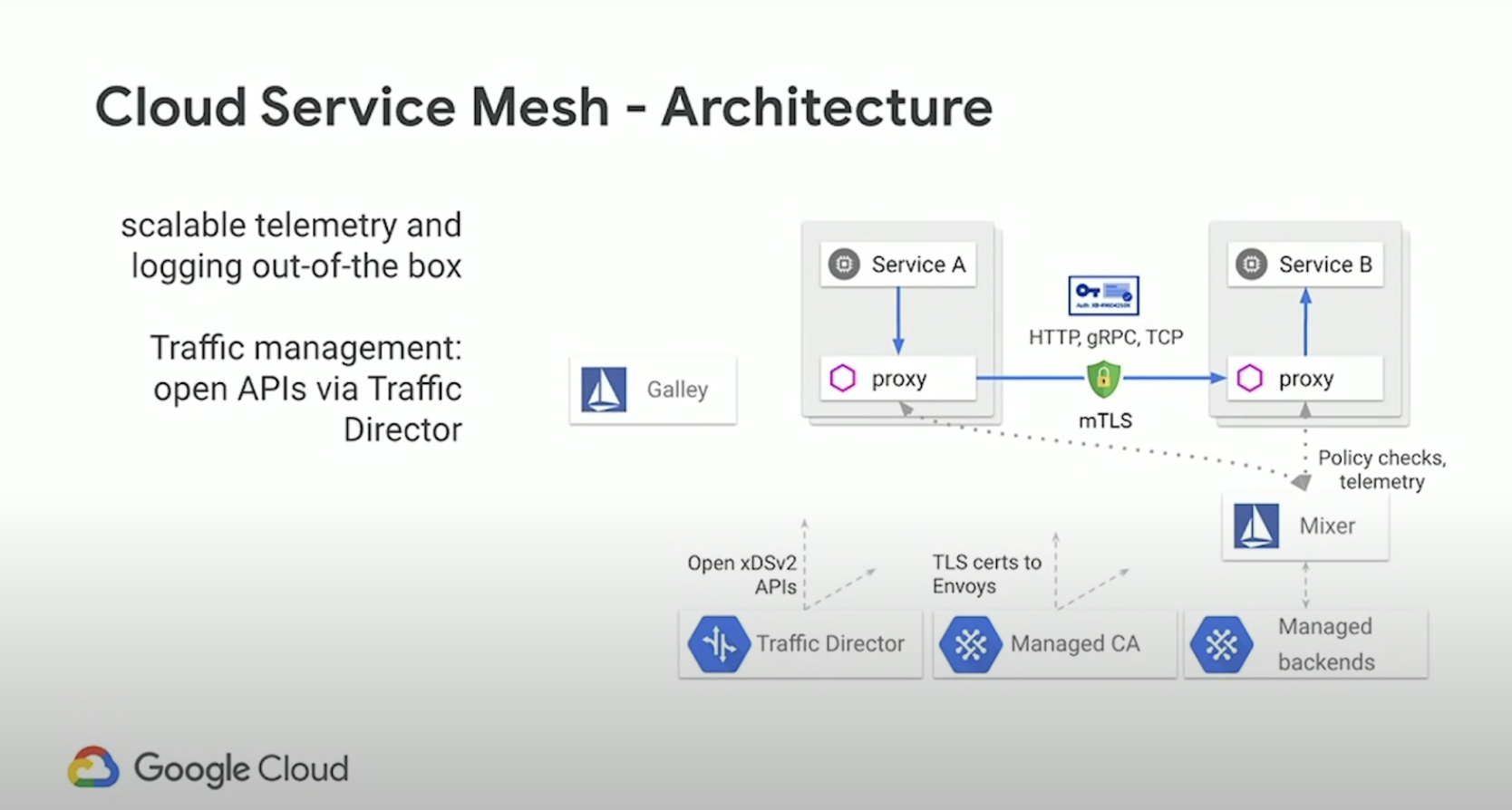

Put it all together: at the top we have the data plane, and at the bottom we have the control plane. The key thing to note is that the Envoy sidecars can handle multiple types of traffic. Note again that this is layer 7, the application layer, so we are talking HTTP, gRPC, and so on. All of this runs in Kubernetes. As a side note, you can run workloads in VMs as well; Istio can handle it.

Quick Overview of Envoy

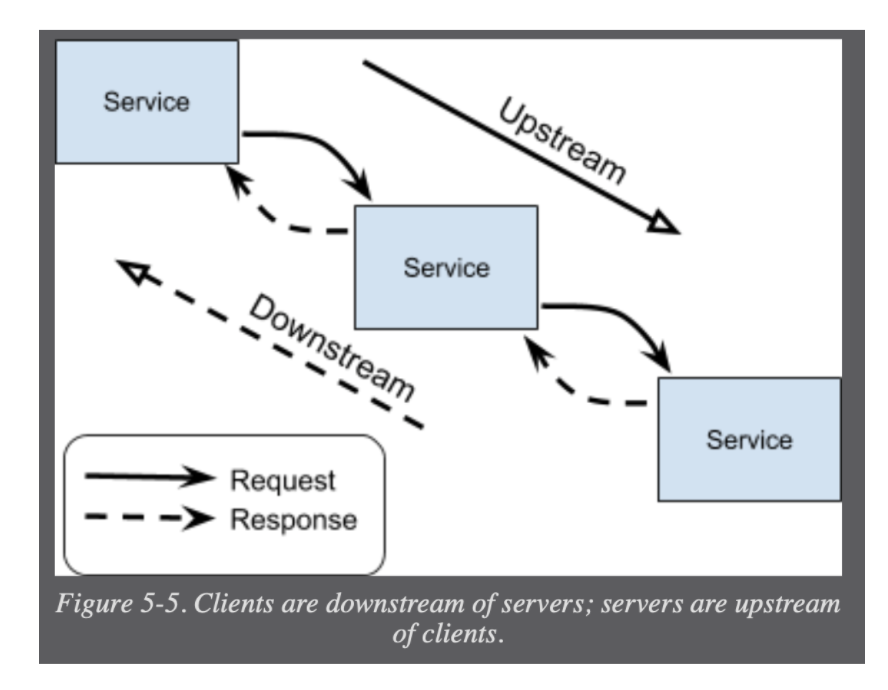

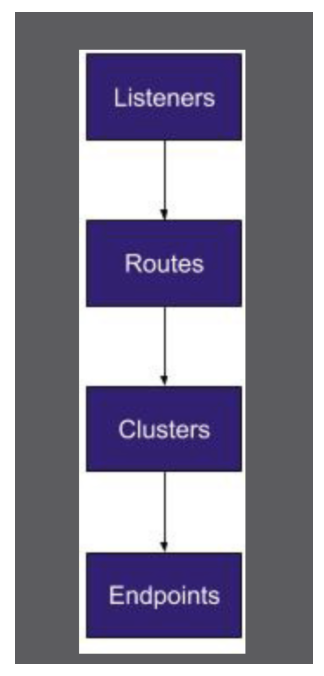

Like other service proxies, Envoy uses network listeners to ingest traffic. The terms upstream and downstream describe the direction of a chain of dependent service requests.

A listener is a named network location (e.g., port, unix domain socket, etc.) that can accept connections from downstream clients.

You can configure listeners, routes, clusters, and endpoints with static files or dynamically through their respective APIs: listener discovery service (LDS), route discovery service (RDS), cluster discovery service (CDS), and endpoint discovery service (EDS).
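To make the four building blocks concrete, here is a rough sketch of a static Envoy (v3 API) configuration with one listener, one route, one cluster, and one endpoint. The names `listener_0`, `service_foo`, and the address `foo.example.internal` are hypothetical; in an Istio mesh you would not write this by hand, since the control plane delivers the equivalent configuration over LDS, RDS, CDS, and EDS.

```yaml
static_resources:
  listeners:
  # Listener: where Envoy accepts connections from downstream clients
  - name: listener_0
    address:
      socket_address: { address: 0.0.0.0, port_value: 10000 }
    filter_chains:
    - filters:
      - name: envoy.filters.network.http_connection_manager
        typed_config:
          "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
          stat_prefix: ingress_http
          # Route: maps a request to a cluster
          route_config:
            name: local_route
            virtual_hosts:
            - name: backend
              domains: ["*"]
              routes:
              - match: { prefix: "/" }
                route: { cluster: service_foo }
          http_filters:
          - name: envoy.filters.http.router
            typed_config:
              "@type": type.googleapis.com/envoy.extensions.filters.http.router.v3.Router
  clusters:
  # Cluster: a named group of upstream endpoints
  - name: service_foo
    connect_timeout: 1s
    type: STRICT_DNS
    load_assignment:
      cluster_name: service_foo
      endpoints:
      - lb_endpoints:
        # Endpoint: the address of one upstream worker (hypothetical)
        - endpoint:
            address:
              socket_address: { address: foo.example.internal, port_value: 8080 }
```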

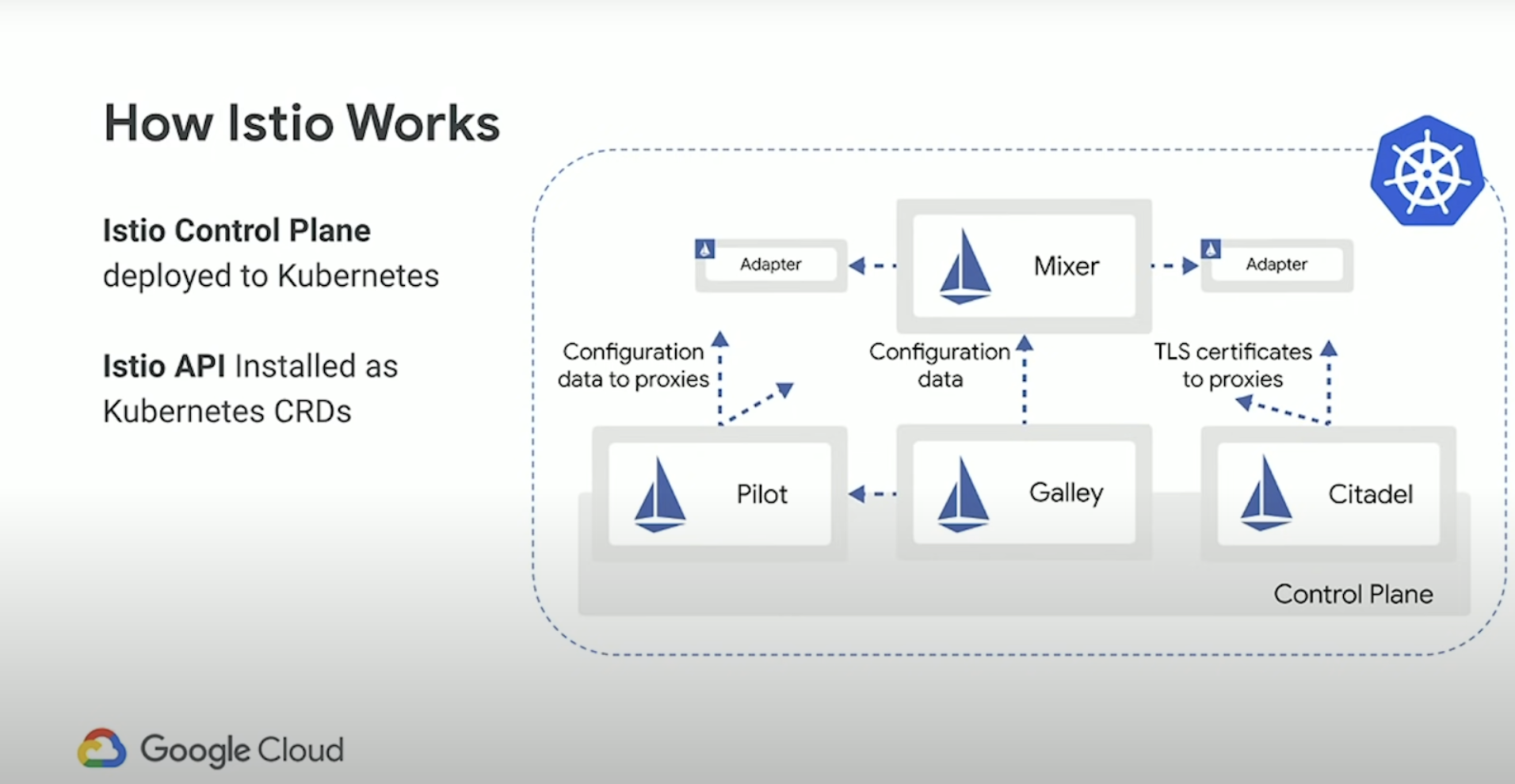

Istio Control Plane Components

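There are four main control plane components of Istio. (Since Istio 1.5, Pilot, Citadel, and Galley ship as a single istiod binary, and Mixer has been removed from recent releases, but the roles below are still a useful way to think about the control plane.)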

- Pilot - You configure traffic indirectly through Pilot. Pilot pushes your config (your traffic rules) down to the Envoy proxies in the form of filters. As the operator, you never interact with these proxies directly; you talk to Istio, the control plane.

- Citadel - Cert management (for TLS and mTLS)

- Mixer - Telemetry

- Galley - Istio’s configuration aggregation and distribution component.

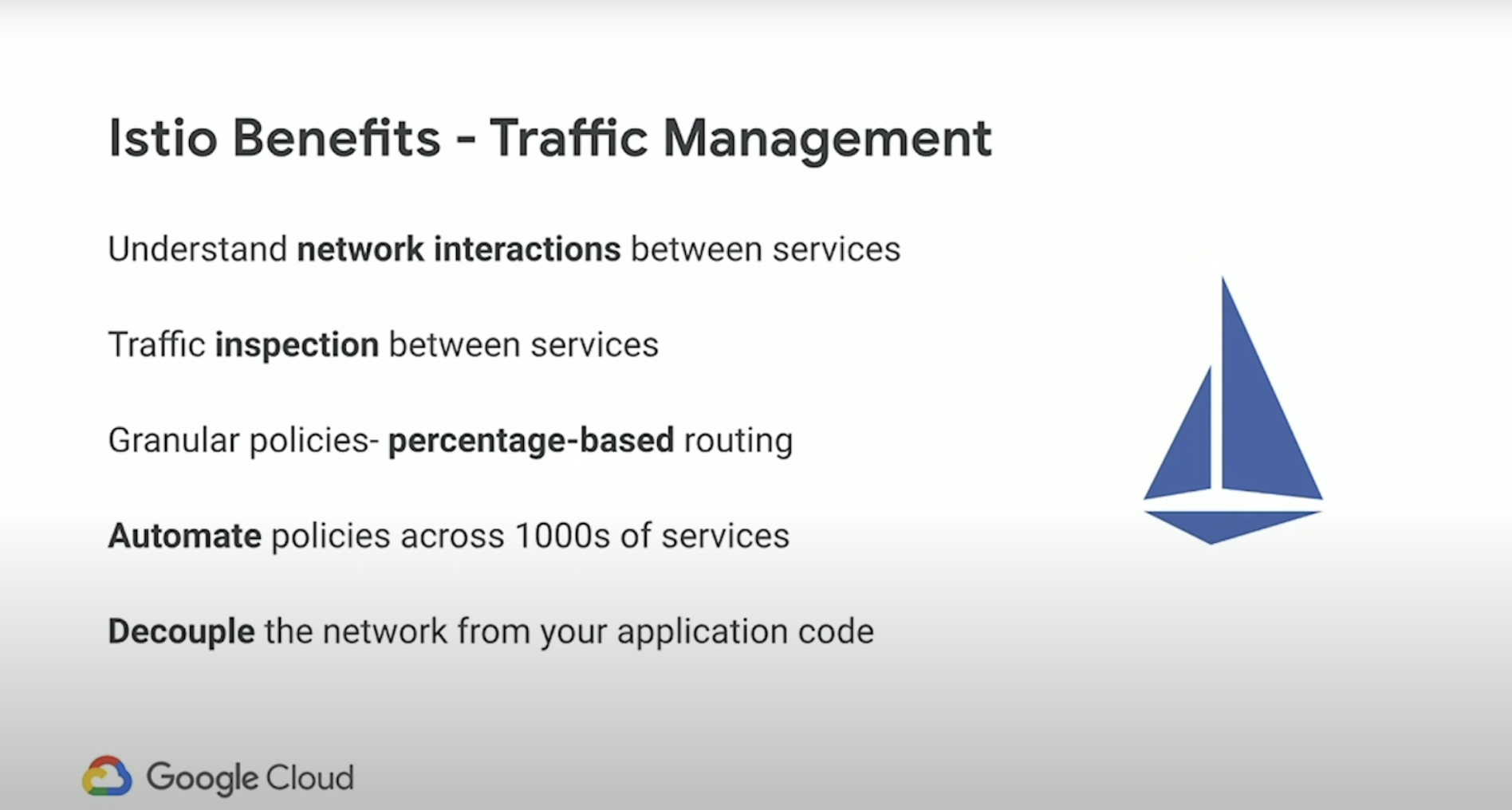

What's the Added Value

Istio is not the easiest thing in the world to deploy, so what does it get you? A whole lot for traffic management.

- Basic visibility into which microservice is calling which.

- Traffic inspection - taking individual requests and making decisions based on things like HTTP headers.

- Granular percentage-based routing - With a plain old Kubernetes service, you are limited by your workload scaling. Say you have a Kubernetes service with a bunch of pods behind it, and you want to do a canary deployment. If you have, let's say, one pod per deployment, the best you could do is 50/50. There would be no way in plain old Kubernetes to say: “I want 1% of traffic to go to that new canary version so I can roll back really quickly if something goes wrong.”

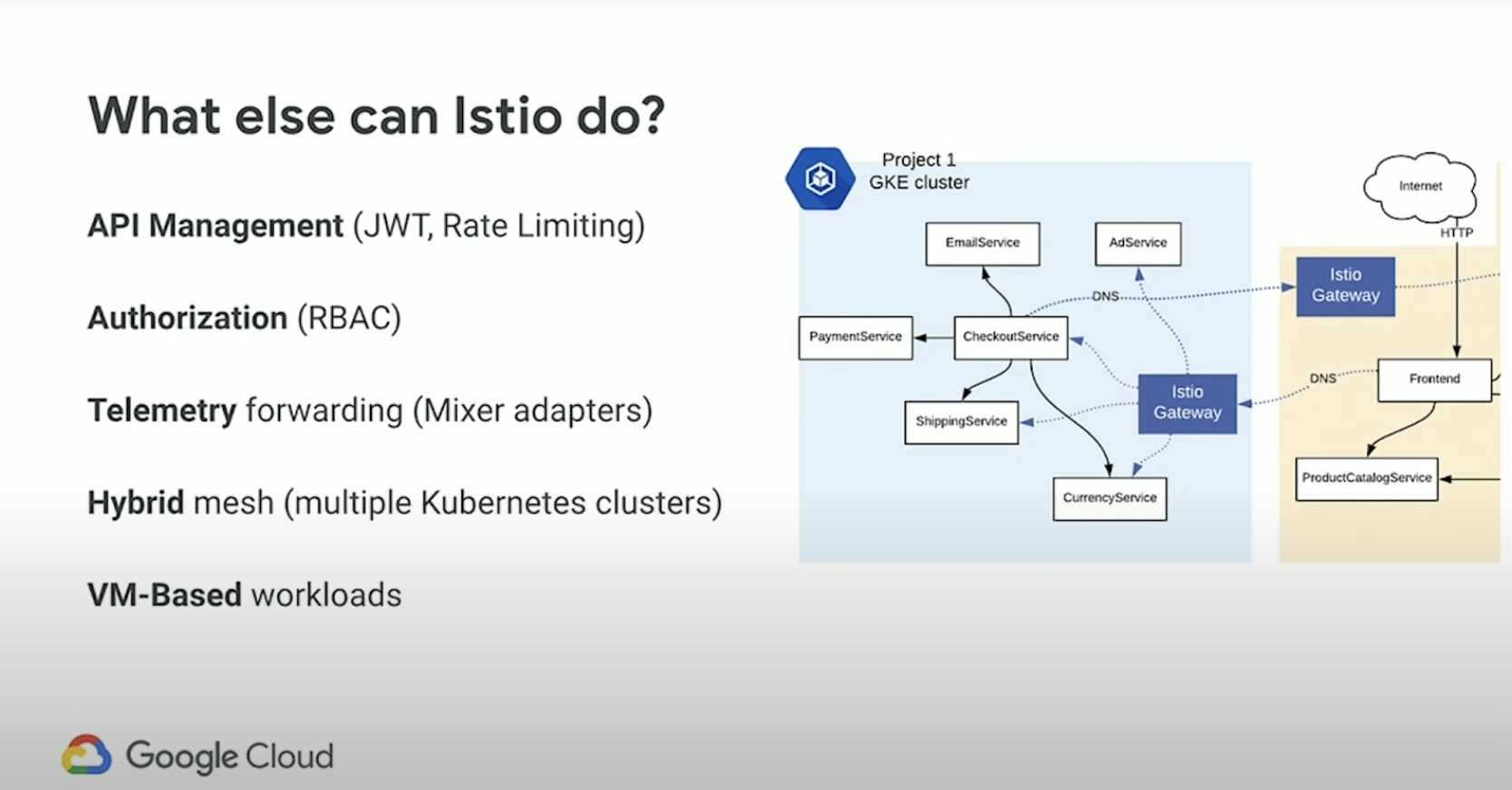

- Automation - Because Istio exposes APIs that you can add config files (YAML) to, and you can use all of the same tooling you’d use with plain Kubernetes, you can do essential automation that you could not do before. For example, you can turn on mTLS end-to-end encryption for your entire mesh with a single rule.

- Decouple network from application code - With Istio, developers no longer have to think about logic that is not application or business logic, such as firewall rules, retry logic, security logic, metrics, and tracing. You take all that network logic and put it in the hands of the operators, so you can make decisions in a unified way.
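As an illustration of the "single rule" for mesh-wide mTLS mentioned in the list above, a sketch along these lines is typical. It assumes Istio's root namespace is the default `istio-system`; a policy named `default` in that namespace applies to the whole mesh.

```yaml
# Mesh-wide policy: workloads only accept mutual TLS traffic.
apiVersion: security.istio.io/v1beta1
kind: PeerAuthentication
metadata:
  name: default
  namespace: istio-system   # assumed root namespace
spec:
  mtls:
    mode: STRICT
```

In practice you might start with `PERMISSIVE` mode and move to `STRICT` once every client has a sidecar.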

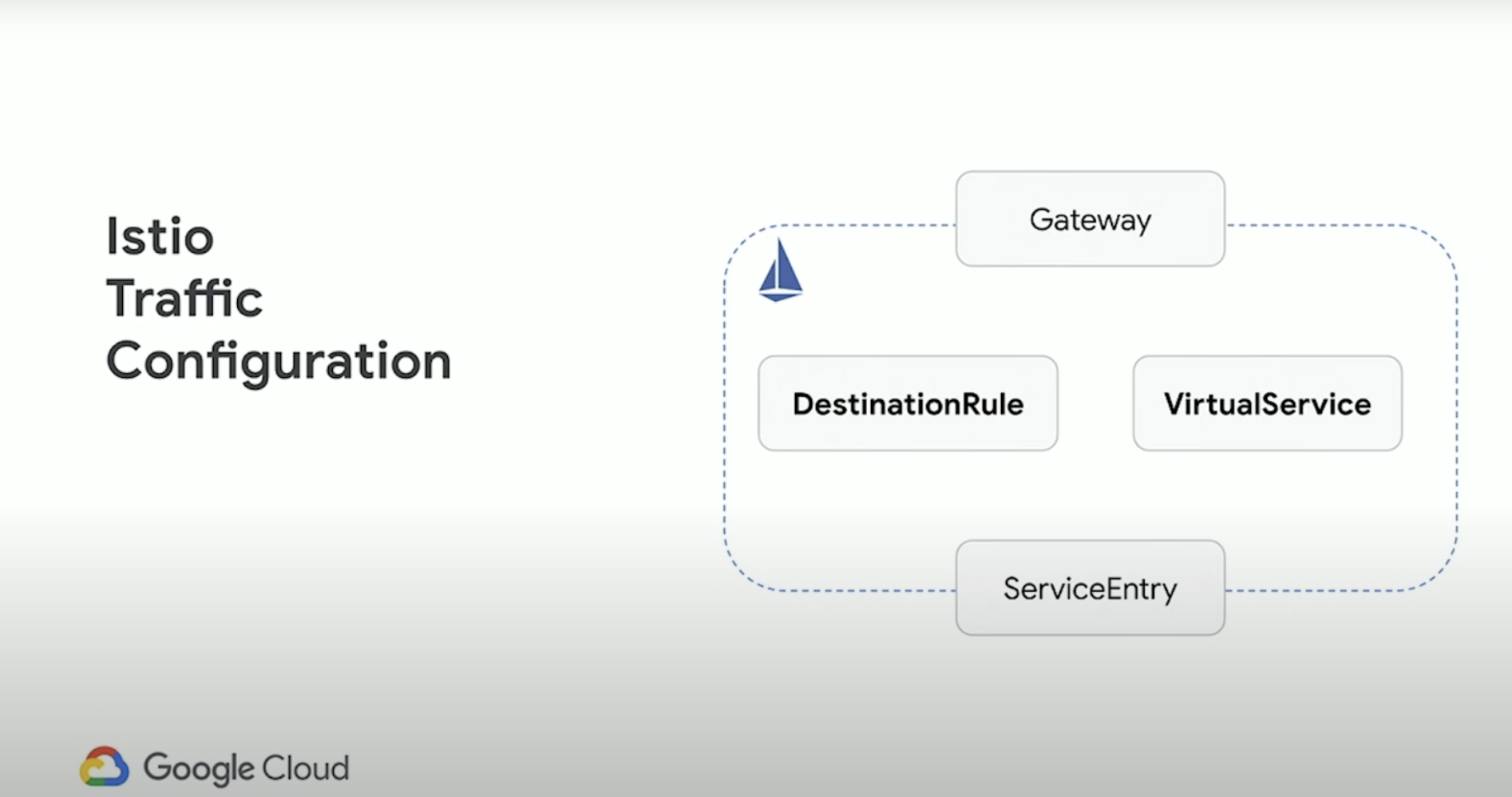

Istio’s Networking APIs - Traffic Management

- Gateway - Manage inbound and outbound traffic for your mesh, letting you specify which traffic you want to enter or leave the mesh.

- VirtualService - Lets you configure how requests are routed to a service within an Istio service mesh, building on the basic connectivity and discovery provided by Istio and your platform.

- DestinationRule - A way to group a service into subsets. Or in other words, you can take a Kubernetes service, and partition it however you want.

- ServiceEntry - Use a service entry to add an entry to the service registry that Istio maintains internally. After you add the service entry, the Envoy proxies can send traffic to the service as if it were a service in your mesh.

VirtualService

Virtual services, along with destination rules, are the key building blocks of Istio’s traffic routing functionality. A virtual service lets you configure how requests are routed to a service within an Istio service mesh, building on the basic connectivity and discovery provided by Istio and your platform. Each virtual service consists of a set of routing rules that are evaluated in order, letting Istio match each given request to the virtual service to a specific real destination within the mesh. Your mesh can require multiple virtual services or none depending on your use case.

Virtual services play a key role in making Istio’s traffic management flexible and powerful. They do this by strongly decoupling where clients send their requests from the destination workloads that actually implement them. Virtual services also provide a rich way of specifying different traffic routing rules for sending traffic to those workloads.

Why is this so useful? Without virtual services, Envoy distributes traffic using round-robin load balancing between all service instances. You can improve this behavior with what you know about the workloads. For example, some might represent a different version. This can be useful in A/B testing, where you might want to configure traffic routes based on percentages across different service versions, or to direct traffic from your internal users to a particular set of instances.

With a virtual service, you can specify traffic behavior for one or more hostnames. You use routing rules in the virtual service that tell Envoy how to send the virtual service’s traffic to appropriate destinations. Route destinations can be versions of the same service or entirely different services.

Hostnames are the core of Istio’s networking model, and Istio’s networking APIs allow you to create hostnames to describe workloads and control how traffic flows to them.
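The routing rules described in this section might look roughly like the sketch below. The service name `my-svc`, the header `x-internal-user`, and the subsets `v1` and `v2` are hypothetical, and the subsets are assumed to be defined in a DestinationRule for the same host.

```yaml
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: my-svc
spec:
  hosts:
  - my-svc.default.svc.cluster.local   # hostname clients address
  http:
  # Rules are evaluated in order: internal users always get v2
  - match:
    - headers:
        x-internal-user:
          exact: "true"
    route:
    - destination:
        host: my-svc.default.svc.cluster.local
        subset: v2
  # Everyone else: 99% to v1, 1% canary traffic to v2
  - route:
    - destination:
        host: my-svc.default.svc.cluster.local
        subset: v1
      weight: 99
    - destination:
        host: my-svc.default.svc.cluster.local
        subset: v2
      weight: 1
```

Because the split is done per request by the proxies, the 1% canary does not depend on how many pods back each version.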

DestinationRule

Along with virtual services, destination rules are a key part of Istio’s traffic routing functionality. You can think of virtual services as how you route your traffic to a given destination, and then you use destination rules to configure what happens to traffic for that destination. Destination rules are applied after virtual service routing rules are evaluated, so they apply to the traffic’s “real” destination.

In particular, you use destination rules to specify named service subsets, such as grouping all a given service’s instances by version. You can then use these service subsets in the routing rules of virtual services to control the traffic to different instances of your services.

Destination rules also let you customize Envoy’s traffic policies when calling the entire destination service or a particular service subset, such as your preferred load balancing model, TLS security mode, or circuit breaker settings. You can see a complete list of destination rule options in the Destination Rule reference.
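A destination rule that groups instances by version and sets traffic policies could be sketched as follows. The host `my-svc` and the `version` pod labels are hypothetical.

```yaml
apiVersion: networking.istio.io/v1beta1
kind: DestinationRule
metadata:
  name: my-svc
spec:
  host: my-svc.default.svc.cluster.local
  # Policy for the whole destination service
  trafficPolicy:
    loadBalancer:
      simple: ROUND_ROBIN
    tls:
      mode: ISTIO_MUTUAL     # mTLS using Istio-issued certificates
  # Named subsets, selected by pod labels
  subsets:
  - name: v1
    labels:
      version: v1
  - name: v2
    labels:
      version: v2
    # Subset-level policy overrides the service-level one
    trafficPolicy:
      loadBalancer:
        simple: RANDOM
```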

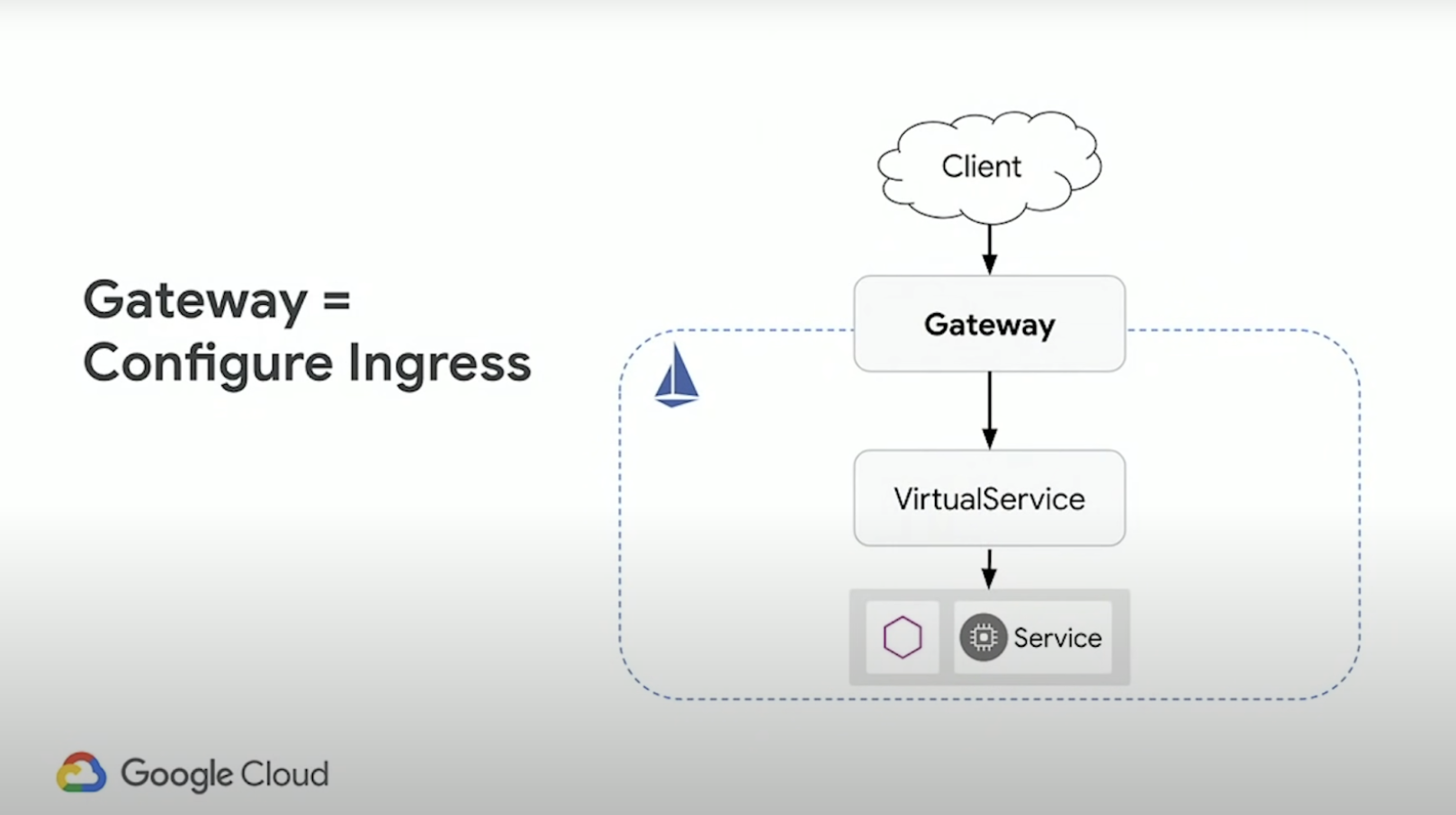

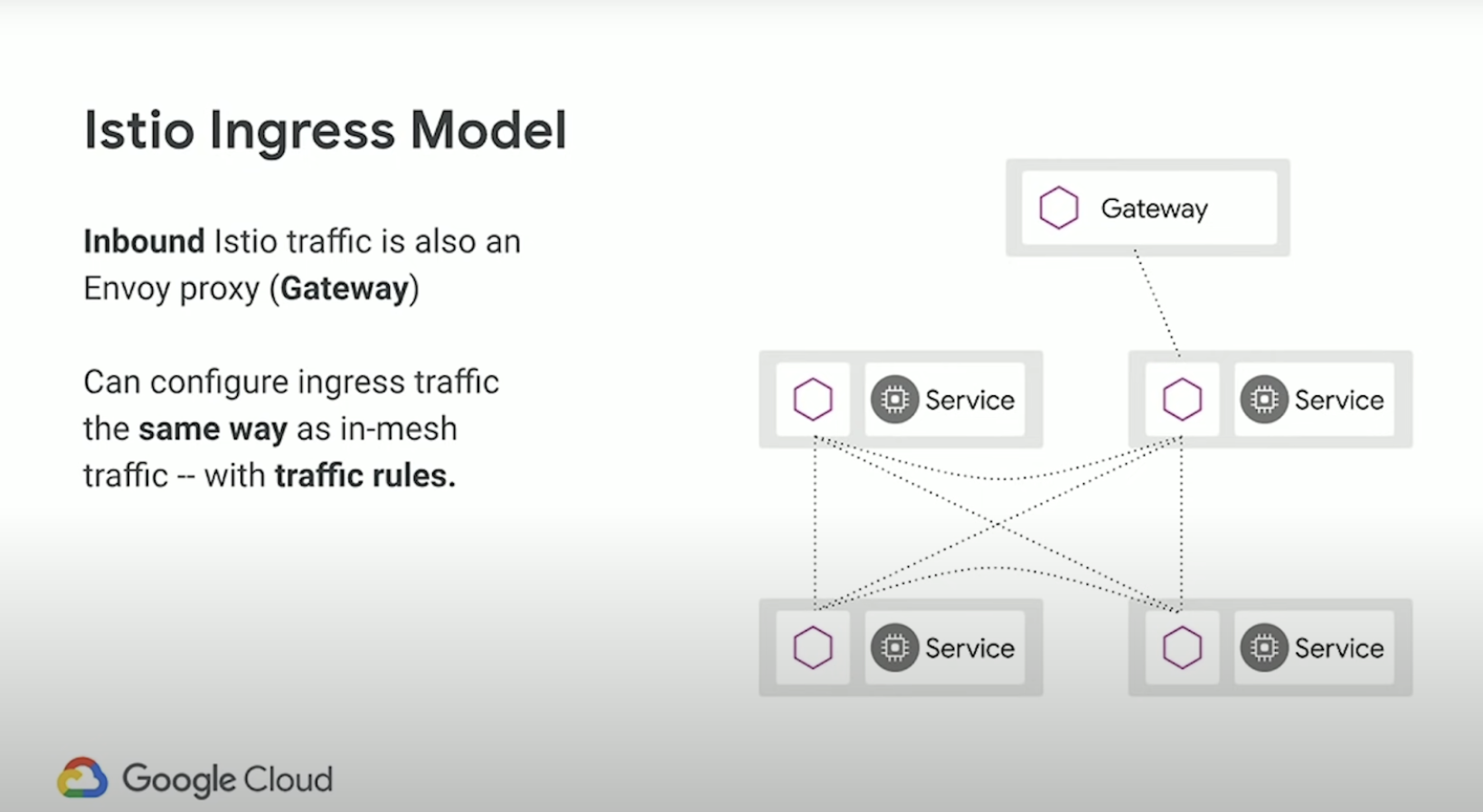

Ingress (North/South) Gateway

You use a gateway to manage inbound and outbound traffic for your mesh, letting you specify which traffic you want to enter or leave the mesh. Gateway configurations are applied to standalone Envoy proxies that are running at the edge of the mesh, rather than sidecar Envoy proxies running alongside your service workloads.

Unlike other mechanisms for controlling traffic entering your systems, such as the Kubernetes Ingress APIs, Istio gateways let you use the full power and flexibility of Istio’s traffic routing. You can do this because Istio’s Gateway resource just lets you configure layer 4 through 6 load balancing properties such as ports to expose, TLS settings, and so on. Then, instead of adding application-layer traffic routing (L7) to the same API resource, you bind a regular Istio virtual service to the gateway. This lets you manage gateway traffic like any other data plane traffic in an Istio mesh.
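The split between the Gateway (L4 through L6) and the bound virtual service (L7) can be sketched like this. The hostname `app.example.com`, the secret name `app-example-com-cert`, and the backend service are hypothetical; the `istio: ingressgateway` selector assumes the default ingress gateway deployment.

```yaml
apiVersion: networking.istio.io/v1beta1
kind: Gateway
metadata:
  name: app-gateway
spec:
  selector:
    istio: ingressgateway          # which gateway proxies this applies to
  servers:
  - port:
      number: 443
      name: https
      protocol: HTTPS
    tls:
      mode: SIMPLE                 # gateway terminates TLS
      credentialName: app-example-com-cert   # hypothetical TLS secret
    hosts:
    - "app.example.com"
---
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: app
spec:
  hosts:
  - "app.example.com"
  gateways:
  - app-gateway                    # binds the L7 rules to the gateway
  http:
  - match:
    - uri:
        prefix: /api
    route:
    - destination:
        host: app.default.svc.cluster.local
        port:
          number: 8080
```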

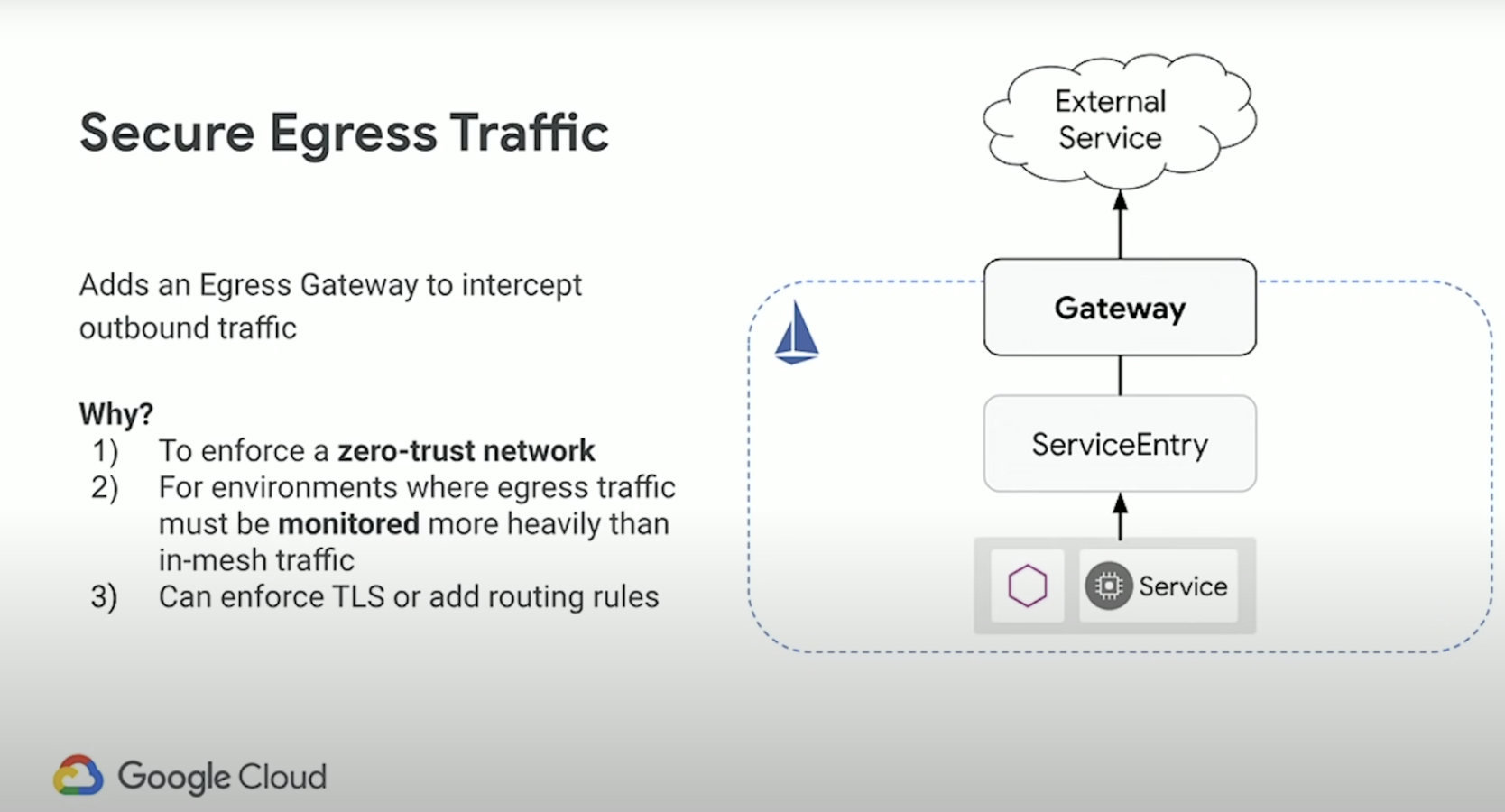

Gateways are primarily used to manage ingress traffic, but you can also configure egress gateways. An egress gateway lets you configure a dedicated exit node for the traffic leaving the mesh, letting you limit which services can or should access external networks, or to enable secure control of egress traffic to add security to your mesh, for example. You can also use a gateway to configure a purely internal proxy.

Note that the default installation of Istio originally had an egress gateway enabled, but in newer versions it is disabled by default. If you want an egress gateway, you must enable it explicitly.

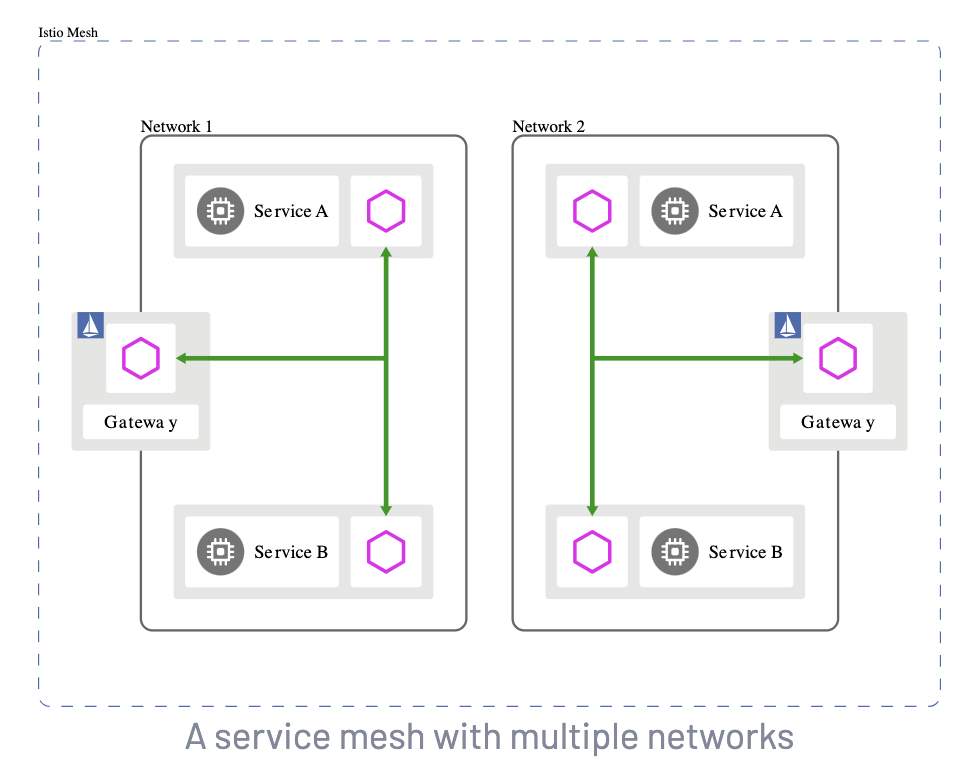

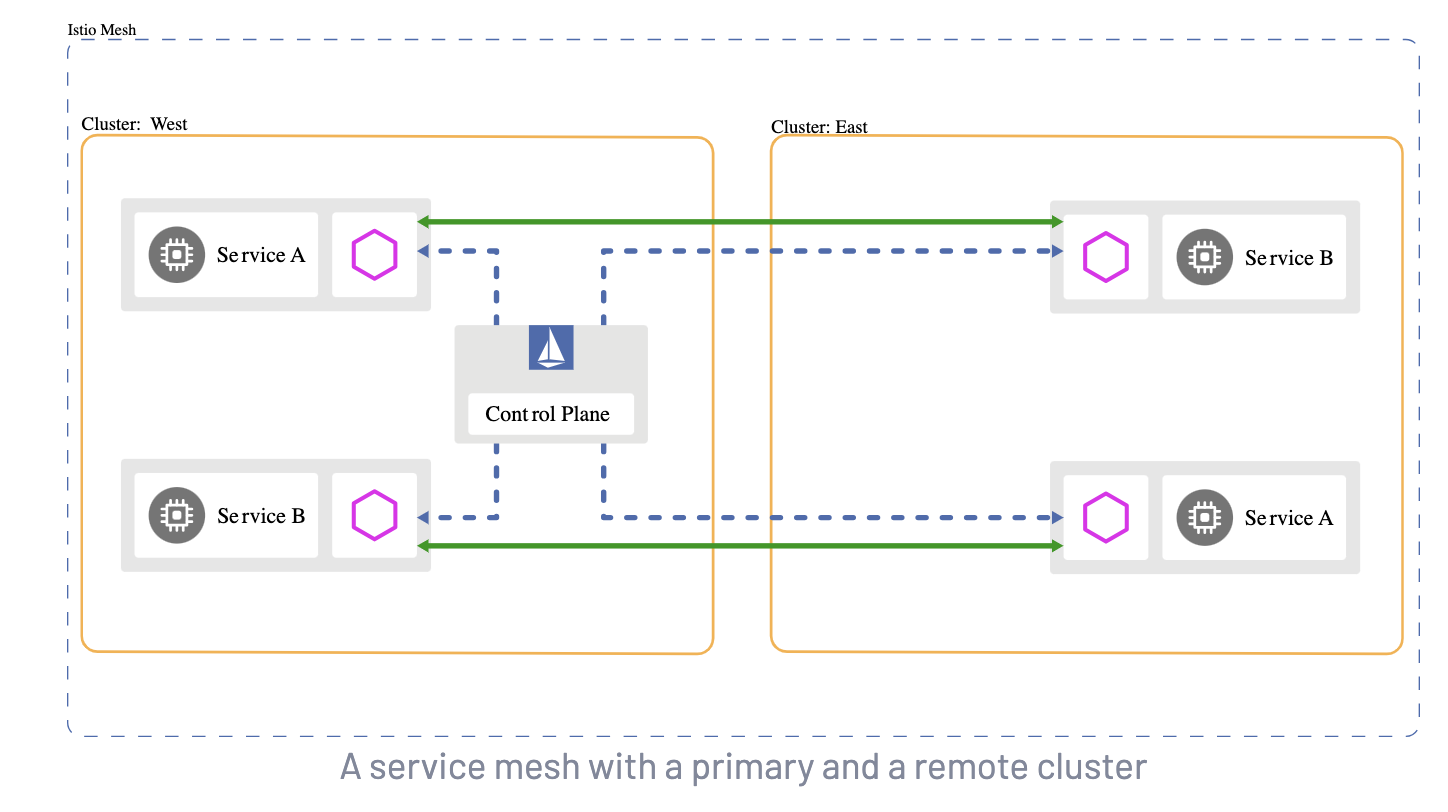

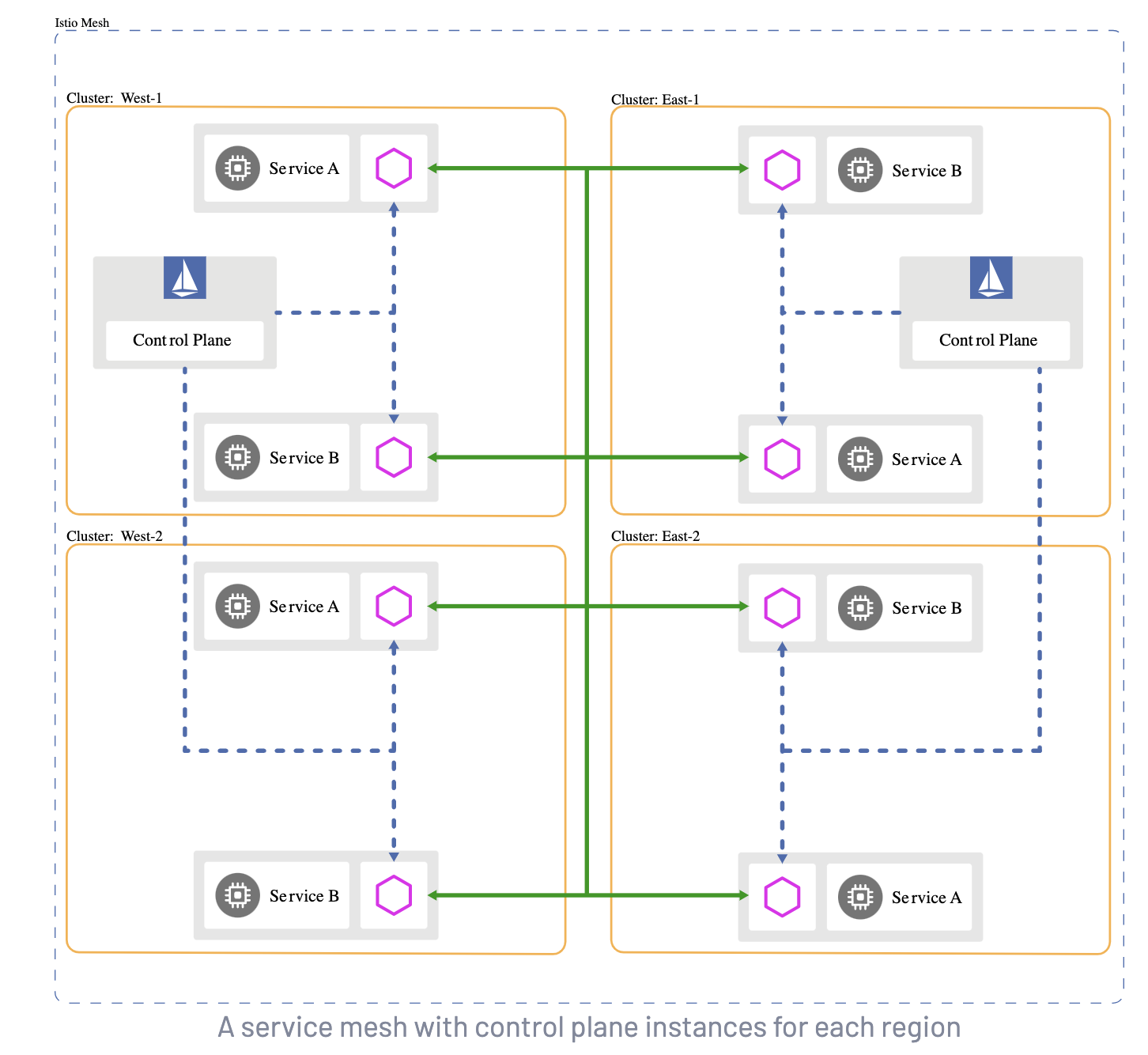

EastWest Gateway

An EastWest Gateway is used to manage intra-mesh traffic and is (usually) an SNI passthrough load balancer, whereas an Ingress Gateway is usually a TLS (HTTPS) reverse proxy load balancer that terminates the TLS connection. An EastWest Gateway is necessary if you are running a multi-cluster service mesh or a multi-network service mesh. To read more about this, see the Istio docs here: Install MultiCluster.

Istio uses the SPIFFE standard (Secure Production Identity Framework for Everyone), which is a set of open-source standards for securely identifying software systems in dynamic and heterogeneous environments. Istio verifies the workload and trust domain using SPIFFE, and uses mTLS to securely proxy traffic through the EastWest Gateway.
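For reference, exposing mesh services through an east-west gateway in a multi-network setup usually involves a Gateway resource along the lines of the sketch below. It assumes the east-west gateway deployment carries the label `istio: eastwestgateway` and lives in `istio-system`, as in Istio's multicluster installation samples.

```yaml
apiVersion: networking.istio.io/v1beta1
kind: Gateway
metadata:
  name: cross-network-gateway
  namespace: istio-system
spec:
  selector:
    istio: eastwestgateway
  servers:
  - port:
      number: 15443
      name: tls
      protocol: TLS
    tls:
      # Route on SNI without terminating TLS; the mTLS session
      # stays end to end between the two workloads' sidecars.
      mode: AUTO_PASSTHROUGH
    hosts:
    - "*.local"
```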

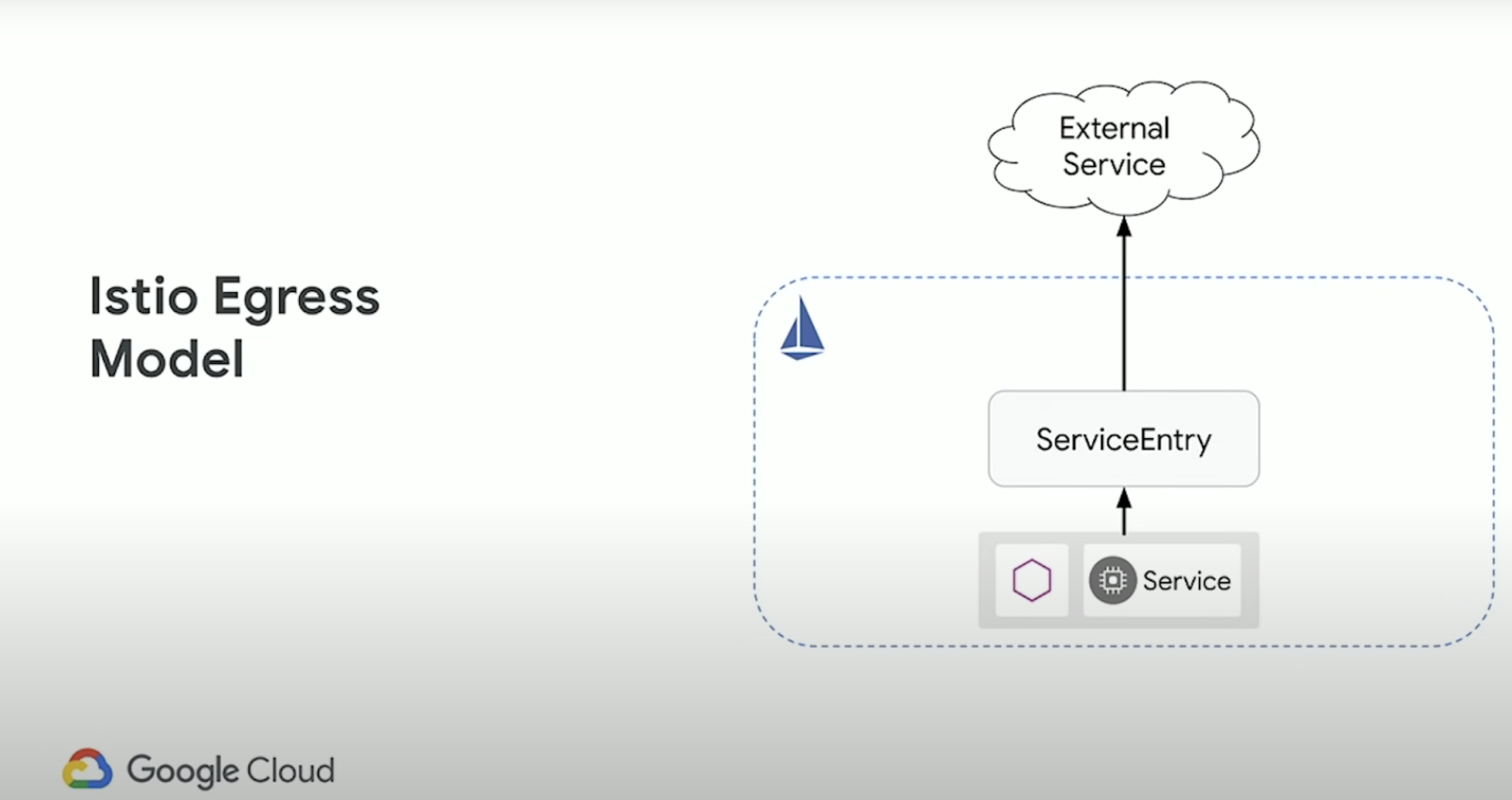

Egress Traffic

ServiceEntry

You use a service entry to add an entry to the service registry that Istio maintains internally. After you add the service entry, the Envoy proxies can send traffic to the service as if it was a service in your mesh. Configuring service entries allows you to manage traffic for services running outside of the mesh, including the following tasks:

- Redirect and forward traffic for external destinations, such as APIs consumed from the web, or traffic to services in legacy infrastructure.

- Define retry, timeout, and fault injection policies for external destinations.

- Run a mesh service in a Virtual Machine (VM) by adding VMs to your mesh.

You don’t need to add a service entry for every external service that you want your mesh services to use. By default, Istio configures the Envoy proxies to pass through requests to unknown services. However, you can’t use Istio features to control the traffic to destinations that aren’t registered in the mesh.
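A service entry for an external HTTPS API might be sketched as follows. The hostname `api.example.com` is hypothetical.

```yaml
apiVersion: networking.istio.io/v1beta1
kind: ServiceEntry
metadata:
  name: external-api
spec:
  hosts:
  - api.example.com          # hypothetical external host
  location: MESH_EXTERNAL    # the service lives outside the mesh
  ports:
  - number: 443
    name: https
    protocol: TLS
  resolution: DNS            # endpoints are discovered via DNS
```

Once the host is in the registry, virtual services and destination rules can reference `api.example.com` like any in-mesh host.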

Other Istio Features

Resiliency

A resilient system is one that can maintain good performance for its users (i.e., staying within its SLOs) while coping with failures in the downstream systems on which it depends. Istio provides a lot of features to help build more resilient applications, the most important being client-side load balancing, circuit breaking via outlier detection, automatic retry, and request timeouts. Istio also provides tools to inject faults into applications, allowing you to build programmatic, reproducible tests of your system’s resiliency.

Load-Balancing Strategy

Client-side load balancing is an incredibly valuable tool for building resilient systems. By allowing clients to communicate directly with servers without going through reverse proxies, we remove points of failure while still keeping a well-behaved system. Further, it allows clients to adjust their behavior dynamically based on responses from servers; for example, to stop sending requests to endpoints that return more errors than other endpoints of the same service (we cover this feature, outlier detection, more in the next section).

DestinationRules allow you to define the load-balancing strategy clients use to select backends to call. For example, a DestinationRule with a round-robin load balancer sends traffic round robin across the endpoints of the service foo.default.svc.cluster.local. A ServiceEntry defines what those endpoints are (or how to discover them at runtime; e.g., via DNS). It’s important to note that a DestinationRule applies only to hosts in Istio’s service registry. If a ServiceEntry does not exist for a host, the DestinationRule is ignored. More complex load-balancing strategies such as consistent hash-based load balancing are also supported.
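A round-robin DestinationRule for foo.default.svc.cluster.local could be sketched like this. The resource name and the header name `x-user-id` in the commented alternative are hypothetical.

```yaml
apiVersion: networking.istio.io/v1beta1
kind: DestinationRule
metadata:
  name: foo-load-balancing
spec:
  host: foo.default.svc.cluster.local
  trafficPolicy:
    loadBalancer:
      simple: ROUND_ROBIN
      # Alternative (replace "simple" above): consistent hashing,
      # so requests with the same header value hit the same endpoint.
      # consistentHash:
      #   httpHeaderName: x-user-id
```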

Outlier Detection

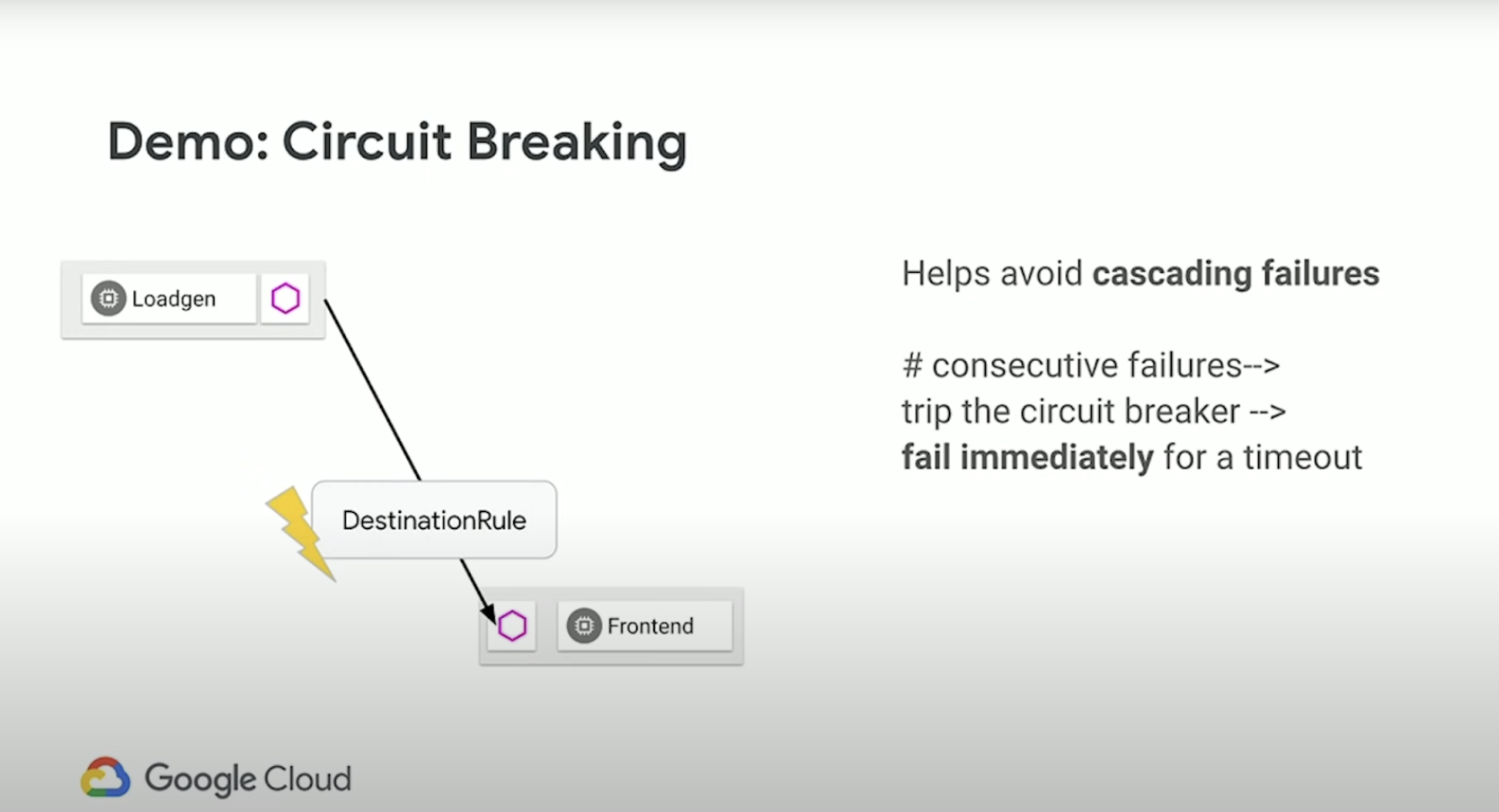

Circuit breaking is a pattern of protecting calls (e.g., network calls to a remote service) behind a “circuit breaker.” If the protected call returns too many errors, we “trip” the circuit breaker and return errors to the caller without executing the protected call. This can be used to mitigate several classes of failure, including cascading failures. In load balancing, to “lame-duck” an endpoint is to remove it from the “active” load-balancing set so that no traffic is sent to it for some period of time. Lame-ducking is one method that we can use to implement the circuit-breaker pattern. Outlier detection is a means of triggering lame-ducking of endpoints that are sending bad responses. We can detect when an individual endpoint is an outlier compared to the rest of the endpoints in our “active” load-balancing set (i.e., returning more errors than other endpoints of the service) and remove the bad endpoint from our “active” load-balancing set.
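In Istio, outlier detection is configured on a DestinationRule. The sketch below uses hypothetical thresholds for the host foo.default.svc.cluster.local; the right values depend on your traffic.

```yaml
apiVersion: networking.istio.io/v1beta1
kind: DestinationRule
metadata:
  name: foo-outlier-detection
spec:
  host: foo.default.svc.cluster.local
  trafficPolicy:
    connectionPool:
      tcp:
        maxConnections: 100          # cap on connections to the service
      http:
        http1MaxPendingRequests: 10  # queued requests before failing fast
    outlierDetection:
      consecutive5xxErrors: 5   # errors in a row before ejecting an endpoint
      interval: 10s             # how often endpoints are evaluated
      baseEjectionTime: 30s     # minimum time an endpoint stays ejected
      maxEjectionPercent: 50    # never eject more than half the endpoints
```

An ejected endpoint is "lame-ducked" for the ejection time and then returned to the active load-balancing set.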

Retries

Every system has transient failures: network buffers overflow, a server shutting down drops a request, a downstream system fails, and so on. We use retries (sending the same request to a different endpoint of the same service) to mitigate the impact of transient failures. However, poor retry policies are a frequent secondary cause of outages: “Something went wrong, and client retries made it worse,” is a common refrain. Often this is because retries are hardcoded into applications (e.g., as a for loop around the network call) and therefore are difficult to change. Istio gives you the ability to configure retries globally for all services in your mesh. More significantly, it allows you to control those retry strategies at runtime via configuration, so you can change client behavior on the fly.
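A per-service retry policy is set on a virtual service route. The following is a sketch with hypothetical values for the host foo.default.svc.cluster.local.

```yaml
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: foo-retries
spec:
  hosts:
  - foo.default.svc.cluster.local
  http:
  - route:
    - destination:
        host: foo.default.svc.cluster.local
    retries:
      attempts: 3           # at most three retries per request
      perTryTimeout: 2s     # deadline for each individual attempt
      retryOn: connect-failure,refused-stream,5xx   # conditions that trigger a retry
```

Changing `attempts` or `retryOn` here takes effect without redeploying the application.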

Timeouts

Timeouts are important for building systems with consistent behavior. By attaching deadlines to requests, we’re able to abandon requests taking too long and free server resources. We’re also able to control our tail latency much more finely, because we know the longest that we’ll wait for any particular request in computing our response for a client.
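A request timeout is also a virtual service route setting. The sketch below uses a hypothetical three-second deadline for the host foo.default.svc.cluster.local; in a real mesh it would normally live in the same VirtualService as the host's other routing rules.

```yaml
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: foo-timeout
spec:
  hosts:
  - foo.default.svc.cluster.local
  http:
  - route:
    - destination:
        host: foo.default.svc.cluster.local
    timeout: 3s   # overall deadline for the request, including retries
```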

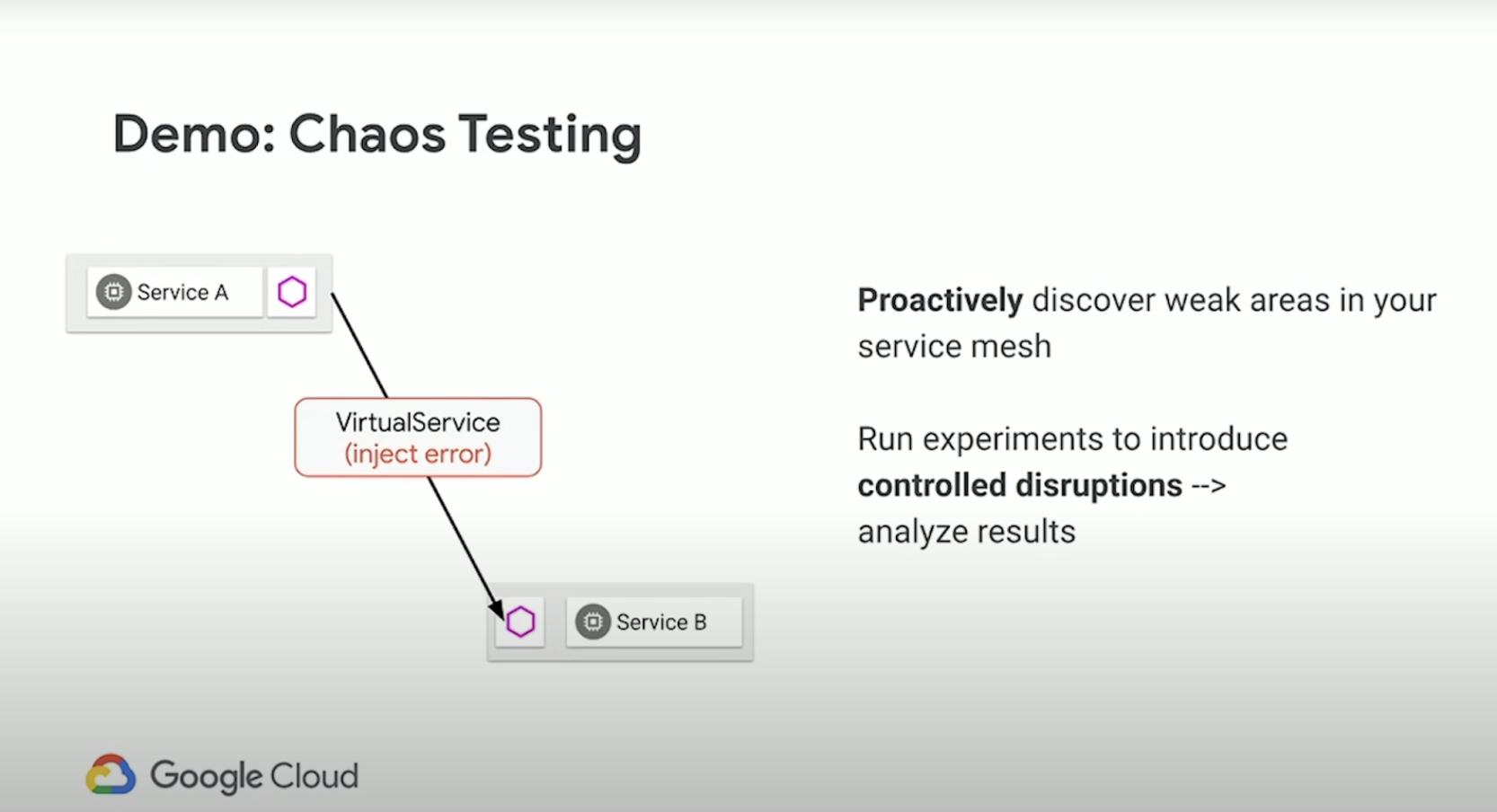

Fault Injection

Fault injection is an incredibly powerful way to test and build reliable distributed applications. Companies like Netflix have taken this to the extreme, coining the term “chaos engineering” to describe the practice of injecting faults into running production systems to ensure that the systems are built to be reliable and tolerant of environmental failures. Istio allows you to configure faults for HTTP traffic, injecting arbitrary delays or returning specific response codes (e.g., 500) for some percentage of traffic.
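Delays and aborts are configured under the `fault` field of a virtual service HTTP route. The percentages and the host below are hypothetical.

```yaml
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: foo-fault-injection
spec:
  hosts:
  - foo.default.svc.cluster.local
  http:
  - fault:
      delay:
        percentage:
          value: 10.0     # delay 10% of requests
        fixedDelay: 5s
      abort:
        percentage:
          value: 5.0      # fail 5% of requests
        httpStatus: 500
    route:
    - destination:
        host: foo.default.svc.cluster.local
```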

Service Mesh Architecture

Extra / Misc

SNI

Server Name Indication (SNI) is an extension to the Transport Layer Security (TLS) computer networking protocol by which a client indicates which hostname it is attempting to connect to at the start of the handshaking process. This allows a server to present one of multiple possible certificates on the same IP address and TCP port number and hence allows multiple secure (HTTPS) websites (or any other service over TLS) to be served by the same IP address without requiring all those sites to use the same certificate. It is the conceptual equivalent to HTTP/1.1 name-based virtual hosting, but for HTTPS. This also allows a proxy to forward client traffic to the right server during TLS/SSL handshake. The desired hostname is not encrypted in the original SNI extension, so an eavesdropper can see which site is being requested.

X-Forwarded-For Header

Applications rely on reverse proxies to forward client attributes in a request, such as the X-Forwarded-For header. However, due to the variety of network topologies that Istio can be deployed in, you must set numTrustedProxies to the number of trusted proxies deployed in front of the Istio gateway proxy, so that the client address can be extracted correctly. This controls the value populated by the ingress gateway in the X-Envoy-External-Address header, which can be reliably used by the upstream services to access the client’s original IP address. Note that all proxies in front of the Istio gateway proxy must parse HTTP traffic and append to the X-Forwarded-For header at each hop. If the number of entries in the X-Forwarded-For header is less than the number of trusted hops configured, Envoy falls back to using the immediate downstream address as the trusted client address.
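One way to set numTrustedProxies is mesh-wide through an IstioOperator overlay, sketched below. The value 2 is a hypothetical example for a topology with two trusted proxies in front of the gateway.

```yaml
apiVersion: install.istio.io/v1alpha1
kind: IstioOperator
spec:
  meshConfig:
    defaultConfig:
      gatewayTopology:
        numTrustedProxies: 2   # trusted hops in front of the Istio gateway
```

The same setting can also be applied to a single gateway through the `proxy.istio.io/config` pod annotation instead of the mesh-wide default.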

Istio-Ingress Gateway As PASSTHROUGH

Because the load balancer is an internal L4 LB and the gateway is set up as PASSTHROUGH, the gateway relies on Server Name Indication (SNI), an extension to TLS, to know which service the client is trying to access. When an HTTPS connection is created, the client first identifies which service it is trying to reach in the ClientHello part of the TLS handshake. Istio’s gateway (Envoy, specifically) implements SNI on TLS, which in general is how it can present the correct certificate and route to the correct service. In PASSTHROUGH mode we combine two capabilities, accepting and routing non-HTTP traffic and selecting a route by SNI hostname, so that TCP traffic is routed based on the SNI hostname without being terminated on the Istio ingress gateway. All the gateway does is inspect the SNI value and route the traffic to the specific backend, which then terminates the TLS connection. The connection “passes through” the gateway and is handled by the actual service, not the gateway.
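A passthrough setup like the one described here can be sketched with a Gateway in PASSTHROUGH mode and a virtual service that matches on the SNI hostname. The hostname `secure.example.com` and the backend service `secure-app` on port 8443 are hypothetical.

```yaml
apiVersion: networking.istio.io/v1beta1
kind: Gateway
metadata:
  name: passthrough-gateway
spec:
  selector:
    istio: ingressgateway
  servers:
  - port:
      number: 443
      name: tls
      protocol: TLS
    tls:
      mode: PASSTHROUGH        # do not terminate TLS at the gateway
    hosts:
    - "secure.example.com"
---
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: secure-app
spec:
  hosts:
  - "secure.example.com"
  gateways:
  - passthrough-gateway
  tls:
  - match:
    - port: 443
      sniHosts:
      - "secure.example.com"   # routing key taken from the ClientHello
    route:
    - destination:
        host: secure-app.default.svc.cluster.local
        port:
          number: 8443         # backend terminates TLS itself
```

Since the gateway never decrypts the traffic, only SNI-based matching is available here; HTTP-level rules such as path or header matches cannot be applied.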

Written: March 26, 2022