Envoy discovers its various dynamic resources via the filesystem or by querying one or more management servers. Collectively, these discovery services and their corresponding APIs are referred to as xDS.

For typical HTTP routing scenarios, the core resource types for the client’s configuration are:

- Listener

- RouteConfiguration

- Cluster

- ClusterLoadAssignment
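Each of these four resource types is an Envoy v3 API message, and a DiscoveryRequest names the type it wants through its type_url field. The type URLs below are Envoy's. The XDS_TYPE_URLS dictionary and the resource_name helper are illustrative only.

```python
# Envoy v3 type URLs for the core xDS resources, keyed by the discovery
# service that serves them (the dict itself is just for illustration).
XDS_TYPE_URLS = {
    "LDS": "type.googleapis.com/envoy.config.listener.v3.Listener",
    "RDS": "type.googleapis.com/envoy.config.route.v3.RouteConfiguration",
    "CDS": "type.googleapis.com/envoy.config.cluster.v3.Cluster",
    "EDS": "type.googleapis.com/envoy.config.endpoint.v3.ClusterLoadAssignment",
}


def resource_name(type_url: str) -> str:
    """Return the short message name at the end of a type URL."""
    return type_url.rsplit(".", 1)[-1]


for service, url in XDS_TYPE_URLS.items():
    print(f"{service}: {resource_name(url)}")
```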

EDS

The Endpoint Discovery Service (EDS) API provides a more advanced mechanism by which Envoy can discover members of an upstream cluster. Layered on top of a static configuration, EDS allows an Envoy deployment to circumvent the limitations of DNS (maximum records in a response, etc.) as well as consume more information used in load balancing and routing (e.g., canary status, zone, etc.).

The endpoint discovery service is an xDS management server, exposed as a gRPC or REST-JSON API, that Envoy uses to fetch cluster members. The cluster members are called “endpoints” in Envoy terminology. For each cluster, Envoy fetches the endpoints from the discovery service.

EDS is the preferred service discovery mechanism for a few reasons:

- Envoy has explicit knowledge of each upstream host (vs. routing through a DNS-resolved load balancer) and can make more intelligent load balancing decisions.

- Extra attributes carried in the discovery API response for each host inform Envoy of the host’s load balancing weight, canary status, zone, etc. These additional attributes are used globally by the Envoy mesh during load balancing, statistic gathering, etc.
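A minimal sketch of how such per-host attributes can feed a load balancing decision. It assumes a simplified, hypothetical dictionary in place of the real ClusterLoadAssignment protobuf (which nests endpoints under locality groups with weights, priorities, and metadata), and the "prefer the local zone, then pick by weight" policy is an illustration, not Envoy's actual zone-aware routing algorithm.

```python
import random

# Hypothetical, simplified view of an EDS response for one cluster.
assignment = {
    "cluster_name": "catalog",
    "endpoints": [
        {"zone": "us-east-1a", "lb_endpoints": [
            {"address": "10.0.1.5:8080", "weight": 3, "canary": False},
            {"address": "10.0.1.6:8080", "weight": 1, "canary": True},
        ]},
        {"zone": "us-east-1b", "lb_endpoints": [
            {"address": "10.0.2.7:8080", "weight": 2, "canary": False},
        ]},
    ],
}


def pick_endpoint(cla, local_zone, allow_canary=False, rng=random):
    """Prefer endpoints in the caller's zone, then choose one by weight."""
    def candidates(same_zone_only):
        return [
            ep
            for group in cla["endpoints"]
            if not same_zone_only or group["zone"] == local_zone
            for ep in group["lb_endpoints"]
            if allow_canary or not ep["canary"]
        ]

    pool = candidates(True) or candidates(False)  # fall back to any zone
    if not pool:
        return None
    weights = [ep["weight"] for ep in pool]
    return rng.choices(pool, weights=weights, k=1)[0]["address"]


print(pick_endpoint(assignment, "us-east-1a"))  # the canary is skipped by default
```

With canaries excluded, a caller in us-east-1a can only get 10.0.1.5:8080; a caller in a zone with no endpoints falls back to the non-canary hosts in every zone, weighted 3:2.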

CDS

The Cluster Discovery Service (CDS) API layers on a mechanism by which Envoy can discover upstream clusters used during routing. Envoy will gracefully add, update, and remove clusters as specified by the API. This API allows implementors to build a topology in which Envoy does not need to be aware of all upstream clusters at initial configuration time.

Typically, when doing HTTP routing along with CDS (but without route discovery service), implementors will make use of the router’s ability to forward requests to a cluster specified in an HTTP request header. Although it is possible to use CDS without EDS by specifying fully static clusters, we recommend still using the EDS API for clusters specified via CDS. Internally, when a cluster definition is updated, the operation is graceful. However, all existing connection pools will be drained and reconnected. EDS does not suffer from this limitation. When hosts are added and removed via EDS, the existing hosts in the cluster are unaffected.
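The difference between a CDS cluster update and an EDS host update can be sketched as set arithmetic over host addresses. Both functions are hypothetical and only model which hosts keep their connection pools, as described above; they do not reflect Envoy's internal data structures.

```python
def apply_eds_update(current_hosts, new_hosts):
    """EDS: only hosts that changed are affected.

    Returns (kept, added, removed). Kept hosts keep their existing connection
    pools, removed hosts are drained, and added hosts get new pools.
    """
    current, new = set(current_hosts), set(new_hosts)
    return current & new, new - current, current - new


def apply_cds_update(current_hosts, new_hosts):
    """CDS: updating the cluster definition drains every existing pool, so no
    host keeps its pool and every host in the new definition reconnects."""
    return set(), set(new_hosts), set(current_hosts)


before = ["10.0.1.5:8080", "10.0.1.6:8080"]
after = ["10.0.1.6:8080", "10.0.1.7:8080"]
print(apply_eds_update(before, after))
print(apply_cds_update(before, after))
```

In the EDS case, 10.0.1.6:8080 survives the update untouched; in the CDS case it is drained and reconnected along with everything else.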

RDS

The Route Discovery Service (RDS) API layers on a mechanism by which Envoy can discover the entire route configuration for an HTTP connection manager filter at runtime. The route configuration will be gracefully swapped in without affecting existing requests. This API, when used alongside EDS and CDS, allows implementors to build a complex routing topology (traffic shifting, blue/green deployment, etc.).
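Traffic shifting of this kind is commonly expressed in Envoy routes as weighted clusters. A minimal sketch, assuming a hypothetical route dictionary with a 90/10 split between hypothetical catalog-v1 and catalog-v2 clusters: a value in [0, total_weight) is mapped onto a cluster by cumulative weight. In a proxy the value would typically come from a random number; here it is passed in so the mapping is easy to check.

```python
import random

# Hypothetical route: 90% of /catalog requests to catalog-v1, 10% to catalog-v2.
route = {
    "prefix": "/catalog",
    "weighted_clusters": [("catalog-v1", 90), ("catalog-v2", 10)],
}


def select_cluster(weighted_clusters, value):
    """Map `value` onto a cluster by walking the cumulative weights."""
    total = sum(weight for _, weight in weighted_clusters)
    value %= total  # fold any integer into [0, total)
    upto = 0
    for name, weight in weighted_clusters:
        upto += weight
        if value < upto:
            return name
    raise ValueError("weights must be non-negative")


counts = {"catalog-v1": 0, "catalog-v2": 0}
for _ in range(10_000):
    counts[select_cluster(route["weighted_clusters"], random.randrange(100))] += 1
print(counts)  # expect roughly a 90/10 split
```

Shifting traffic is then just a matter of RDS pushing a route with new weights; no cluster or endpoint changes are needed.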

LDS

The Listener Discovery Service (LDS) API layers on a mechanism by which Envoy can discover entire listeners at runtime. This includes all filter stacks, up to and including HTTP filters with embedded references to RDS. Adding LDS into the mix allows almost every aspect of Envoy to be dynamically configured. Hot restart should only be required for very rare configuration changes (admin, tracing driver, etc.), certificate rotation, or binary updates.

SDS

The Secret Discovery Service (SDS) API layers on a mechanism by which Envoy can discover cryptographic secrets (certificate plus private key, TLS session ticket keys) for its listeners, as well as configuration of peer certificate validation logic (trusted root certs, revocations, etc.).

NDS

Name Discovery Service: https://github.com/istio/istio/blob/master/pilot/pkg/xds/nds.go

NdsGenerator generates the configuration for NDS, the Name Discovery Service. Istio agents send NDS requests to istiod, and istiod responds with a list of services and their associated IPs (including service entries).

The agent then updates its internal DNS table based on this data. If DNS capture is enabled in the pod, the agent captures all DNS requests and attempts to resolve them locally before forwarding them to the upstream DNS servers.
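The agent's local-first resolution can be sketched as a table lookup with an upstream fallback. This assumes a hypothetical name_table mapping hostnames to IP lists, which is not the actual NDS message format, and it ignores details a real DNS proxy must handle, such as search-domain expansion and record types.

```python
from typing import Callable, Dict, List

# Hypothetical table built from an NDS response: hostname -> IPs.
name_table: Dict[str, List[str]] = {
    "productpage.default.svc.cluster.local": ["10.96.12.4"],
    "api.external.example.com": ["192.0.2.10", "192.0.2.11"],  # e.g. from a service entry
}


def resolve(hostname: str, table: Dict[str, List[str]],
            upstream: Callable[[str], List[str]]) -> List[str]:
    """Answer from the local table first; otherwise forward to upstream DNS."""
    ips = table.get(hostname.rstrip(".").lower())
    if ips:
        return ips
    return upstream(hostname)


def fake_upstream(hostname: str) -> List[str]:
    # Hypothetical stand-in for forwarding to the pod's upstream DNS servers.
    return ["198.51.100.42"]


print(resolve("productpage.default.svc.cluster.local.", name_table, fake_upstream))
print(resolve("www.example.org", name_table, fake_upstream))
```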

Original destination

The original destination load balancer is a special-purpose load balancer that can only be used with an original destination cluster. The upstream host is selected based on the downstream connection metadata: connections are opened to the address that was the incoming connection's destination before the connection was redirected to Envoy. The load balancer adds new destinations to the cluster on demand, and the cluster periodically cleans out unused hosts. No other load balancing policy can be used with original destination clusters.

An original destination cluster can be used when incoming connections are redirected to Envoy either via an iptables REDIRECT or TPROXY target or with Proxy Protocol. In these cases, requests routed to an original destination cluster are forwarded to upstream hosts as addressed by the redirection metadata, without any explicit host configuration or upstream host discovery. Connections to upstream hosts are pooled, and unused hosts are flushed out when they have been idle longer than cleanup_interval, which defaults to 5000ms. If the original destination address is not available, no upstream connection is opened. Envoy can also pick up the original destination from an HTTP header. Original destination service discovery must be used with the original destination load balancer.
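A minimal sketch of the on-demand host set, assuming a hypothetical OriginalDstCluster class with an injectable clock so the behavior is easy to test. It approximates "idle" as "not picked within cleanup_interval"; Envoy's actual bookkeeping differs in detail.

```python
import time
from typing import Callable, Dict, Optional, Tuple

Address = Tuple[str, int]


class OriginalDstCluster:
    """Hosts are created on demand from each connection's pre-redirect
    destination and removed once idle longer than cleanup_interval."""

    def __init__(self, cleanup_interval_s: float = 5.0,
                 clock: Callable[[], float] = time.monotonic):
        self.cleanup_interval_s = cleanup_interval_s  # cleanup_interval defaults to 5000ms
        self.clock = clock
        self.last_used: Dict[Address, float] = {}

    def pick_host(self, original_dst: Optional[Address]) -> Optional[Address]:
        if original_dst is None:
            return None  # no original destination: no upstream connection is opened
        self.last_used[original_dst] = self.clock()  # add on demand, or refresh
        return original_dst

    def cleanup(self) -> None:
        now = self.clock()
        for host, used_at in list(self.last_used.items()):
            if now - used_at > self.cleanup_interval_s:
                del self.last_used[host]
```

Note that pick_host never consults a configured host list: the only input is the address the connection was originally headed to, which is why no other load balancing policy makes sense for this cluster type.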

Static is the simplest service discovery type. The configuration explicitly specifies the resolved network name (IP address/port, unix domain socket, etc.) of each upstream host.

When using strict DNS service discovery, Envoy will continuously and asynchronously resolve the specified DNS targets. Each returned IP address in the DNS result will be considered an explicit host in the upstream cluster. This means that if the query returns three IP addresses, Envoy will assume the cluster has three hosts, and all three should be load balanced to. If a host is removed from the result Envoy assumes it no longer exists and will drain traffic from any existing connection pools. Consequently, if a successful DNS resolution returns 0 hosts, Envoy will assume that the cluster does not have any hosts.

Note that Envoy never synchronously resolves DNS in the forwarding path. At the expense of eventual consistency, there is never a worry of blocking on a long running DNS query.

If a single DNS name resolves to the same IP multiple times, these IPs will be de-duplicated. If multiple DNS names resolve to the same IP, health checking will not be shared. This means that care should be taken if active health checking is used with DNS names that resolve to the same IPs: if an IP is repeated many times between DNS names it might cause undue load on the upstream host.
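The strict DNS host-set rules can be sketched as a pure function over resolver results. It assumes a hypothetical input shape of DNS name to returned IPs, and keys each host by (name, ip) so that the same IP under two names stays two hosts, matching the note that health checking is not shared between them.

```python
from typing import Dict, List, Tuple


def strict_dns_hosts(results: Dict[str, List[str]]) -> List[Tuple[str, str]]:
    """Build a strict DNS cluster's hosts from {dns_name: [ip, ...]}.

    - Every returned IP is a host that should receive load-balanced traffic.
    - An IP repeated under one name is de-duplicated (DNS order is kept).
    - The same IP under two names yields two hosts, each health checked separately.
    - A successful answer with no IPs leaves the cluster with no hosts.
    """
    hosts = []
    for name, ips in results.items():
        for ip in dict.fromkeys(ips):  # de-duplicate while preserving order
            hosts.append((name, ip))
    return hosts


print(strict_dns_hosts({
    "a.example.com": ["10.0.0.1", "10.0.0.1", "10.0.0.2"],
    "b.example.com": ["10.0.0.1"],
}))
```

Here 10.0.0.1 appears twice in the resulting host list, once per name, which is exactly the case where active health checks could put extra load on a single upstream.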

If respect_dns_ttl is enabled, DNS record TTLs and dns_refresh_rate are used to control the DNS refresh rate. For a strict DNS cluster, if the minimum of all record TTLs is 0, dns_refresh_rate is used as the cluster’s DNS refresh rate. dns_refresh_rate defaults to 5000ms if not specified. dns_failure_refresh_rate controls the refresh frequency during failures; if it is not configured, the DNS refresh rate is used. DNS resolution emits the cluster statistics update_attempt, update_success, and update_failure.
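One reading of these refresh rules, written as a small function. It is a simplified sketch: DNS TTLs are taken in seconds and the other values in milliseconds, and dns_failure_refresh_rate is treated as a single fixed value, whereas Envoy's field is a RefreshRate with a base and maximum interval used for backoff. The function name is hypothetical.

```python
from typing import Optional, Sequence

DEFAULT_DNS_REFRESH_RATE_MS = 5000  # dns_refresh_rate default, per the text above


def next_refresh_ms(ttls_s: Sequence[int], respect_dns_ttl: bool,
                    dns_refresh_rate_ms: Optional[int] = None,
                    dns_failure_refresh_rate_ms: Optional[int] = None,
                    failed: bool = False) -> int:
    """Delay before the next DNS resolution of a strict DNS cluster."""
    refresh_ms = (dns_refresh_rate_ms if dns_refresh_rate_ms is not None
                  else DEFAULT_DNS_REFRESH_RATE_MS)
    if failed:
        # Without dns_failure_refresh_rate, failures reuse the normal refresh rate.
        return (dns_failure_refresh_rate_ms
                if dns_failure_refresh_rate_ms is not None else refresh_ms)
    if respect_dns_ttl and ttls_s and min(ttls_s) > 0:
        return min(ttls_s) * 1000  # the lowest record TTL drives the refresh
    return refresh_ms  # TTLs ignored, absent, or a minimum TTL of 0
```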

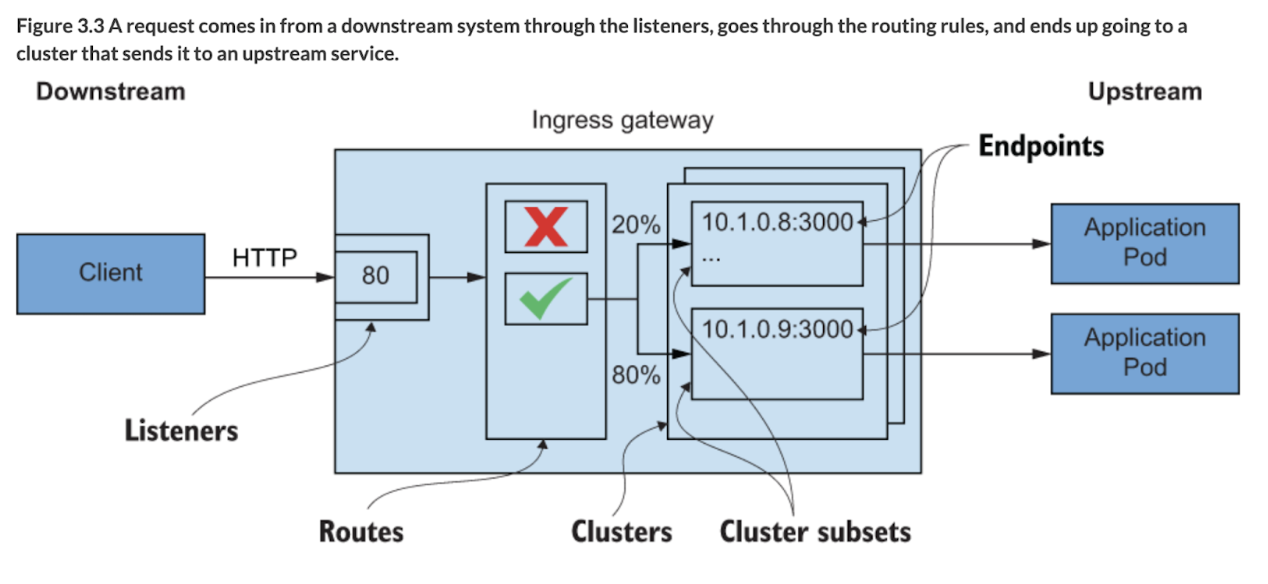

- Listeners — Expose a port to the outside world to which applications can connect. For example, a listener on port 80 accepts traffic and applies any configured behavior to that traffic.

- Routes — Routing rules for how to handle traffic that comes in on listeners. For example, if a request comes in and matches /catalog, direct that traffic to the catalog cluster.

- Clusters — Specific upstream services to which Envoy can route traffic. For example, catalog-v1 and catalog-v2 can be separate clusters, and routes can specify rules about how to direct traffic to either v1 or v2 of the catalog service.

This is a conceptual understanding of what Envoy does for L7 traffic. Envoy uses terminology similar to that of other proxies when conveying traffic directionality. For example, traffic comes into a listener from a downstream system. This traffic is routed to one of Envoy’s clusters, which is responsible for sending that traffic to an upstream system. Traffic flows through Envoy from downstream to upstream.
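The listener, route, and cluster chain can be sketched with a toy configuration. All names and addresses are hypothetical (including the web cluster), and real Envoy configuration is far richer; this only shows the direction of the lookup from downstream to upstream.

```python
from typing import Optional, Tuple

# Hypothetical, heavily simplified L7 configuration.
config = {
    "listeners": {80: "http_routes"},                  # port -> route table
    "routes": {"http_routes": [("/catalog", "catalog-v1"),
                               ("/", "web")]},         # ordered (prefix, cluster)
    "clusters": {"catalog-v1": ["10.0.1.5:8080"],
                 "web": ["10.0.3.2:8080"]},            # cluster -> upstream hosts
}


def handle(port: int, path: str, cfg: dict) -> Optional[Tuple[str, str]]:
    """Follow a downstream request through listener -> route -> cluster."""
    route_table = cfg["listeners"].get(port)
    if route_table is None:
        return None  # no listener on this port
    for prefix, cluster in cfg["routes"][route_table]:  # first match wins
        if path.startswith(prefix):
            upstream = cfg["clusters"][cluster][0]  # first host stands in for load balancing
            return cluster, upstream
    return None  # no route matched


print(handle(80, "/catalog/items", config))
```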

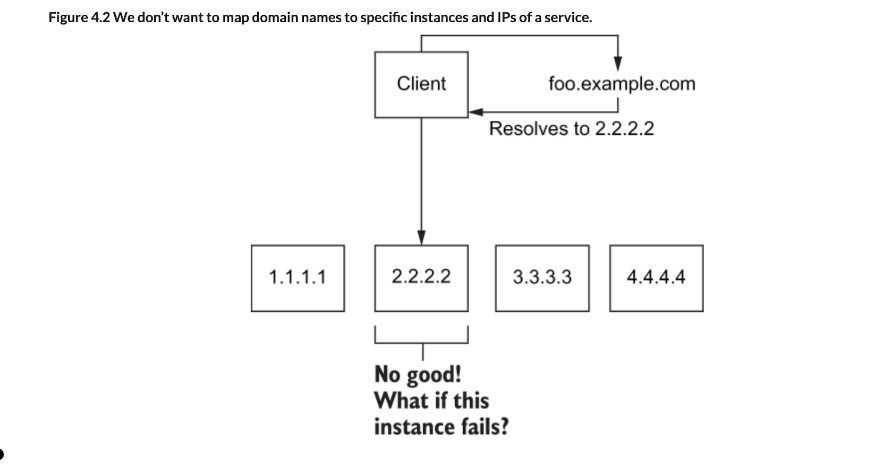

Taking an example from the book "Istio in Action", let’s say we have a service that we wish to expose at api.istioinaction.io/v1/products for external systems to get a list of products in our catalog. When our client tries to query that endpoint, the client’s networking stack first tries to resolve the api.istioinaction.io domain name to an IP address. This is done with DNS servers.

- The networking stack queries the DNS servers for the IP addresses for a particular hostname.

- So the first step in getting traffic into our network is to map our service’s IP to a hostname in DNS.

- For a public address, we could use a service like Amazon Route 53 or Google Cloud DNS and map a domain name to an IP address.

- In our own datacenters, we’d use internal DNS servers to do the same thing.

- But to what IP address should we map the name?

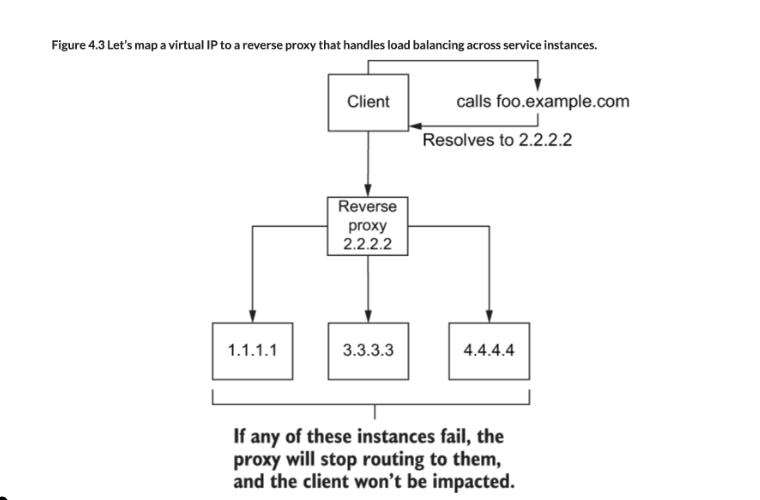

The diagram above shows how mapping the domain name to a virtual IP address, which represents our service and forwards traffic to our actual service instances, gives us higher availability and flexibility. The virtual IP is bound to a type of ingress point known as a reverse proxy. The reverse proxy is an intermediary component that’s responsible for distributing requests to backend services and does not correspond to any specific service. The reverse proxy can also provide capabilities like load balancing so requests don’t overwhelm any one backend.

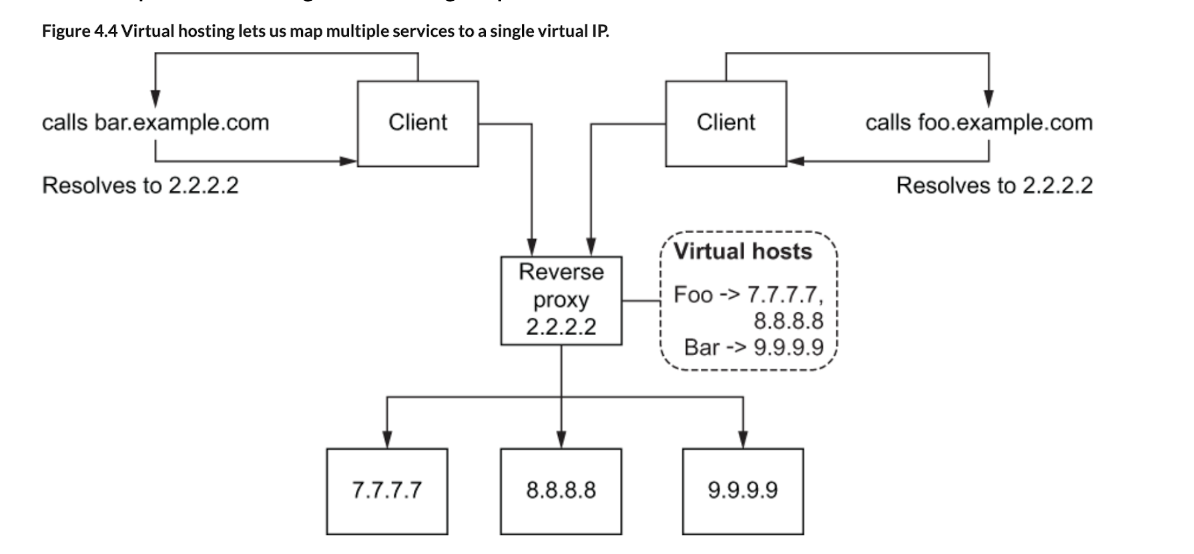

- Mapping both hostnames to the same virtual IP would mean requests for either hostname end up at that virtual IP, and thus the same ingress reverse proxy would route the request.

- If the reverse proxy were smart enough, it could use the Host HTTP header to further delineate which requests should go to which group of services.

Hosting multiple different services at a single entry point is known as virtual hosting.

We need a way to decide which virtual host group a particular request should be routed to.


- With HTTP/1.1, we can use the Host header; with HTTP/2, we can use the :authority header.

- With TCP connections, we can rely on Server Name Indication (SNI) with TLS.
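Choosing the key that selects a virtual host group, per protocol, can be sketched as below. The function and its protocol labels are hypothetical. It lowercases the name and strips any port, since a Host or :authority value may carry one, and it handles bracketed IPv6 literals.

```python
from typing import Dict, Optional


def strip_port(host: str) -> str:
    if host.startswith("["):  # IPv6 literal such as [2001:db8::1]:8443
        return host[: host.index("]") + 1]
    return host.split(":", 1)[0]


def virtual_host_key(protocol: str, headers: Optional[Dict[str, str]] = None,
                     sni: Optional[str] = None) -> Optional[str]:
    """Return the hostname that selects a virtual host group."""
    headers = {k.lower(): v for k, v in (headers or {}).items()}
    if protocol == "HTTP/1.1":
        host = headers.get("host")
    elif protocol == "HTTP/2":
        host = headers.get(":authority")
    elif protocol == "TLS":
        host = sni  # read from the ClientHello; no HTTP headers are visible yet
    else:
        return None
    return strip_port(host).lower() if host else None


print(virtual_host_key("HTTP/1.1", {"Host": "api.istioinaction.io:443"}))
```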

The important thing to note is that the edge ingress functionality we see in Istio uses virtual IP routing and virtual hosting to route service traffic into the cluster.

Some of these definitions are slightly contentious within the industry; however, they are how Envoy uses them throughout its documentation and codebase.

A downstream host connects to Envoy, sends requests, and receives responses.

An upstream host receives connections and requests from Envoy and returns responses.

A listener is a named network location (e.g., port, unix domain socket, etc.) that can be connected to by downstream clients. Envoy exposes one or more listeners that downstream hosts connect to.

A cluster is a group of logically similar upstream hosts that Envoy connects to. Envoy discovers the members of a cluster via service discovery. It optionally determines the health of cluster members via active health checking. The cluster member that Envoy routes a request to is determined by the load balancing policy.

A host is an entity capable of network communication (application on a mobile phone, server, etc.). In the documentation, a host is a logical network application. A physical piece of hardware could have multiple hosts running on it, as long as each of them can be independently addressed.

A mesh is a group of hosts that coordinate to provide a consistent network topology. In the documentation, an “Envoy mesh” is a group of Envoy proxies that form a message passing substrate for a distributed system composed of many different services and application platforms.

Runtime configuration is an out-of-band, real-time configuration system deployed alongside Envoy. Configuration settings can be altered to affect operation without needing to restart Envoy or change the primary configuration.

Important to note: the following is from the Istio perspective, but it is all Envoy under the hood. Istio runs on top of Envoy.

As the data-plane service proxy, Envoy intercepts all incoming and outgoing requests at runtime (as traffic flows through the service mesh).

- This interception is done transparently via iptables rules or a Berkeley Packet Filter (BPF) program that routes all network traffic, in and out, through Envoy.

- Envoy inspects the request and uses the request’s hostname, SNI, or service virtual IP address to determine the request’s target (the service to which the client is intending to send a request).

- Envoy applies that target’s routing rules to determine the request’s destination (the service to which the service proxy is actually going to send the request).

- Having determined the destination, Envoy applies the destination’s rules.

- Destination rules include load-balancing strategy, which is used to pick an endpoint (the endpoint is the address of a worker supporting the destination service).

- Services generally have more than one worker available to process requests. Requests can be balanced across those workers.

- Finally, Envoy forwards the intercepted request to the endpoint.
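The steps above can be sketched as a tiny pipeline. It assumes hypothetical lookup tables in place of the configuration Istio pushes to Envoy: a VIP-to-hostname map, a routing rule that sends the reviews target to a reviews-v2 destination, and round robin as that destination's load-balancing strategy.

```python
import itertools
from typing import Optional

# Hypothetical mesh state.
vips = {"10.96.0.20": "reviews.default.svc.cluster.local"}
routing_rules = {"reviews.default.svc.cluster.local": "reviews-v2"}  # target -> destination
endpoints = {"reviews-v2": ["10.1.0.7:9080", "10.1.0.8:9080"]}
# Destination rule: round robin over each destination's endpoints.
balancers = {dest: itertools.cycle(eps) for dest, eps in endpoints.items()}


def forward(host: Optional[str] = None, vip: Optional[str] = None) -> str:
    target = host or vips[vip]                       # 1. identify the target
    destination = routing_rules.get(target, target)  # 2. apply the target's routing rules
    return next(balancers[destination])              # 3. load-balance to an endpoint
```

Successive calls, whether addressed by hostname or by VIP, resolve to the same destination and alternate between its two endpoints.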

A number of items of note are worth further illumination. First, it’s desirable to have your applications speak cleartext (communicate without encryption) to the sidecarred service proxy and let the service proxy handle transport security.

For example, your application can speak HTTP to the sidecar and let the sidecar handle the upgrade to HTTPS. This allows the service proxy to gather L7 metadata about requests, which allows Istio to generate L7 metrics and manipulate traffic based on L7 policy. Without the service proxy performing TLS termination, Istio can generate metrics for and apply policy on only the L4 segment of the request, restricting policy to contents of the IP packet and TCP header (essentially, a source and destination address and port number).

Second, we get to perform client-side load balancing rather than relying on traditional load balancing via reverse proxies. Client-side load balancing means that we can establish network connections directly from clients to servers while still maintaining a resilient, well-behaved system. That in turn enables more efficient network topologies with fewer hops than traditional systems that depend on reverse proxies.

Typically, Pilot (the Istio control plane) has detailed endpoint information about services in the registry, which it pushes directly to the service proxies. So, unless you configure the service proxy to do otherwise, at runtime it selects an endpoint from a static set of endpoints pushed to it by Pilot and does not perform dynamic address resolution (e.g., via DNS) at runtime. Therefore, the only things Istio can route traffic to are hostnames in Istio’s service registry. There is an installation option in newer versions of Istio (set to “off” by default in 1.1) that changes this behavior and allows Envoy to forward traffic to unknown services that are not modeled in Istio, so long as the application provides an IP address.

Applications rely on reverse proxies to forward client attributes in a request, such as the X-Forwarded-For header. However, due to the variety of network topologies that Istio can be deployed in, you must set numTrustedProxies to the number of trusted proxies deployed in front of the Istio gateway proxy, so that the client address can be extracted correctly. This controls the value populated by the ingress gateway in the X-Envoy-External-Address header, which upstream services can reliably use to access the client’s original IP address. Note that all proxies in front of the Istio gateway proxy must parse HTTP traffic and append to the X-Forwarded-For header at each hop. If the number of entries in the X-Forwarded-For header is less than the number of trusted hops configured, Envoy falls back to using the immediate downstream address as the trusted client address.
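A minimal sketch of that extraction rule, assuming the header arrives as one comma-separated string. The function name is hypothetical; num_trusted_hops plays the role of numTrustedProxies, which Istio surfaces to Envoy as the HTTP connection manager's xff_num_trusted_hops setting. The sketch follows the behavior Envoy documents when use_remote_address is enabled and treats N == 0 as "trust only the downstream connection".

```python
from typing import List


def trusted_client_address(xff: str, num_trusted_hops: int, downstream_addr: str) -> str:
    """Return the trusted client address from an incoming X-Forwarded-For value.

    Each of the N trusted proxies appended the address it saw, so the Nth entry
    from the right is trustworthy. With fewer than N entries (or N == 0), fall
    back to the immediate downstream address.
    """
    entries: List[str] = [e.strip() for e in xff.split(",") if e.strip()]
    if num_trusted_hops <= 0 or len(entries) < num_trusted_hops:
        return downstream_addr
    return entries[-num_trusted_hops]


# A client spoofs "198.51.100.1"; one trusted proxy appends the real client IP.
print(trusted_client_address("198.51.100.1, 203.0.113.7", 1, "10.0.0.5"))  # 203.0.113.7
```

Counting from the right is what defeats spoofing: anything the client wrote into the header sits to the left of the entries appended by proxies you trust.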

Server Name Indication (SNI) is an extension to the Transport Layer Security (TLS) computer networking protocol by which a client indicates which hostname it is attempting to connect to at the start of the handshaking process. This allows a server to present one of multiple possible certificates on the same IP address and TCP port number and hence allows multiple secure (HTTPS) websites (or any other service over TLS) to be served by the same IP address without requiring all those sites to use the same certificate. It is the conceptual equivalent to HTTP/1.1 name-based virtual hosting, but for HTTPS. This also allows a proxy to forward client traffic to the right server during TLS/SSL handshake. The desired hostname is not encrypted in the original SNI extension, so an eavesdropper can see which site is being requested.
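Certificate selection by SNI can be sketched as an exact-then-wildcard lookup. The certificate names, the example.com hostnames, and the function are hypothetical, and the single-label wildcard rule is the usual TLS convention rather than a description of a specific Envoy code path.

```python
from typing import Dict, Optional


def select_certificate(sni: Optional[str], certs: Dict[str, str], default: str) -> str:
    """Pick the certificate to present for the SNI name in the ClientHello.

    Exact names win; a wildcard such as *.example.com covers exactly one extra
    label (shop.example.com, but not a.shop.example.com).
    """
    if not sni:
        return default  # client sent no SNI
    name = sni.lower().rstrip(".")
    if name in certs:
        return certs[name]
    _, _, parent = name.partition(".")
    return certs.get("*." + parent, default) if parent else default


certs = {"api.example.com": "api-cert", "*.example.com": "wildcard-cert"}
print(select_certificate("shop.example.com", certs, "default-cert"))  # wildcard-cert
```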

Encrypted Client Hello (ECH) is a TLS 1.3 protocol extension that enables encryption of the whole Client Hello message, which is sent during the early stage of TLS 1.3 negotiation. ECH encrypts the payload with a public key that the relying party (a web browser) needs to know in advance, which means ECH is most effective with large CDNs known to browser vendors in advance.

- xDS: https://www.envoyproxy.io/docs/envoy/latest/intro/arch_overview/operations/dynamic_configuration

- SNI (Server Name Indication): https://www.envoyproxy.io/docs/envoy/latest/start/sandboxes/tls-sni

- Service Discovery: https://www.envoyproxy.io/docs/envoy/latest/intro/arch_overview/upstream/service_discovery

- Clusters: https://www.envoyproxy.io/docs/envoy/latest/api-v3/clusters/clusters

- Response Flags: https://blog.getambassador.io/understanding-envoy-proxy-and-ambassador-http-access-logs-fee7802a2ec5

Date Written: June 20, 2024